How to Prioritize Tasks: 5 Proven Methods for Professionals and Teams

How to prioritize tasks using 5 proven methods: Eisenhower Matrix, MoSCoW, RICE, time-blocking, and Eat the Frog. Includes comparison table and daily routine.

Most professionals do not have a prioritization problem. They have a bias problem. Recency bias makes the last request feel most important. Urgency bias treats every deadline as a crisis. The mere-urgency effect, documented in a 2018 Journal of Consumer Research study, shows that people consistently choose urgent tasks over important ones even when the important tasks offer objectively better outcomes.

Learning how to prioritize tasks effectively is less about finding the right to-do list app and more about building a repeatable system that counteracts these biases.

After applying prioritization frameworks across 80+ operations and transformation engagements -- from two-person workstream teams to 200-person portfolio reviews -- we have tracked which methods produce the best outcomes and which create the illusion of structure without changing behavior.

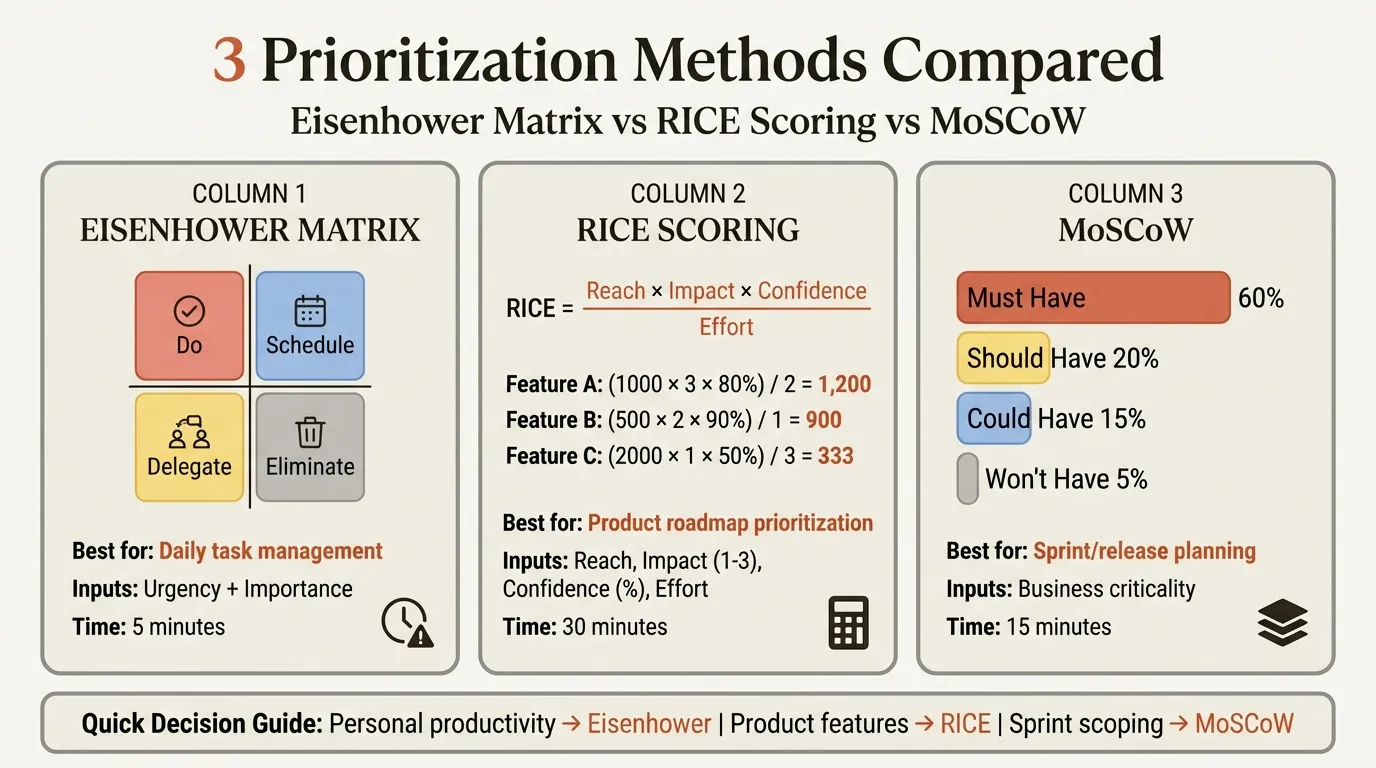

This guide goes deep on the three frameworks that matter most -- RICE scoring and MoSCoW with full worked examples, plus a quick reference for the Eisenhower Matrix -- along with time-blocking as the execution layer, a method selection guide based on context, and a real-world weekly workflow that ties them together. For a broader view of strategy frameworks, see our Strategic Frameworks Guide.

Why Most People Prioritize Tasks Poorly

Three cognitive biases undermine prioritization more than any lack of tools or discipline:

Urgency bias. A task with a deadline feels important regardless of its actual impact. The result is a schedule dominated by urgent-but-unimportant work while strategic projects never get started.

Recency bias. The most recent request displaces the task you planned. A Slack message at 2 PM overrides the high-impact work you scheduled at 9 AM. By end of day, you have responded to six requests and completed zero planned work.

The planning fallacy. Kahneman and Tversky showed that people underestimate task duration by 30-50% on average. Your "prioritized" list of 12 items was never realistic, and by 3 PM you are triaging rather than executing.

The methods below counteract these biases by forcing explicit criteria, separating ranking from execution, and limiting work-in-progress so that priority translates into action.

RICE Scoring: Full Worked Example

RICE is a quantitative framework that scores items across four dimensions: Reach (how many people it affects per quarter), Impact (how much it moves the needle per person, scored from 0.25 for minimal to 3 for massive), Confidence (certainty of the estimates, expressed as a percentage), and Effort (person-months required).

Formula: RICE Score = (Reach x Impact x Confidence) / Effort

The Scenario

A B2B SaaS product team has 8 backlog items competing for a 4-person engineering team's next quarter. Total capacity: 12 person-months. The product manager scores all 8 items in a single 20-minute session.

| # | Initiative | Reach (users/qtr) | Impact (0.25-3) | Confidence | Effort (person-mo) | RICE Score |
|---|---|---|---|---|---|---|
| 1 | Automated onboarding flow | 500 | 2 (high) | 80% | 3 | 267 |
| 2 | Bulk CSV import for admins | 50 | 3 (massive) | 90% | 1 | 135 |
| 3 | Dashboard redesign | 200 | 1 (medium) | 100% | 2 | 100 |
| 4 | SSO integration | 120 | 2 (high) | 70% | 2 | 84 |
| 5 | Mobile push notifications | 300 | 1 (medium) | 50% | 2 | 75 |
| 6 | API rate limit overhaul | 80 | 2 (high) | 90% | 2 | 72 |
| 7 | Dark mode | 400 | 0.25 (minimal) | 100% | 1.5 | 67 |
| 8 | Custom report builder | 60 | 2 (high) | 40% | 3 | 16 |

The math behind the top item: Automated onboarding: (500 x 2 x 0.80) / 3 = 800 / 3 = 267. High reach, high impact on activation, and 80% confidence from 15 user interviews.
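To make the arithmetic reproducible, here is a minimal Python sketch that applies the formula to the eight initiatives above and ranks them. The `Initiative` dataclass and `rice_score` function are illustrative names, not part of any RICE tool. Confidence is entered as a fraction (0.8 for 80%).

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    reach: float       # users affected per quarter
    impact: float      # 0.25 (minimal) to 3 (massive)
    confidence: float  # certainty of the estimates as a fraction (0.8 = 80%)
    effort: float      # person-months


def rice_score(item: Initiative) -> float:
    """RICE = (Reach x Impact x Confidence) / Effort."""
    return item.reach * item.impact * item.confidence / item.effort


# Inputs copied from the backlog table
backlog = [
    Initiative("Automated onboarding flow", 500, 2, 0.80, 3),
    Initiative("Bulk CSV import for admins", 50, 3, 0.90, 1),
    Initiative("Dashboard redesign", 200, 1, 1.00, 2),
    Initiative("SSO integration", 120, 2, 0.70, 2),
    Initiative("Mobile push notifications", 300, 1, 0.50, 2),
    Initiative("API rate limit overhaul", 80, 2, 0.90, 2),
    Initiative("Custom report builder", 60, 2, 0.40, 3),
    Initiative("Dark mode", 400, 0.25, 1.00, 1.5),
]

# Rank from highest to lowest score
ranked = sorted(backlog, key=rice_score, reverse=True)
for rank, item in enumerate(ranked, start=1):
    print(f"{rank}. {item.name}: {rice_score(item):.0f}")
```

When you sort on the computed score instead of assigning ranks by hand, dark mode (67) comes out seventh and the custom report builder (16) comes out last.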

The Decision

The team draws the capacity line at 12 person-months. Items 1-6 fit (cumulative: 3 + 1 + 2 + 2 + 2 + 2 = 12 person-months). Items 7 and 8 are deferred. The custom report builder scored lowest because its 40% confidence crushed the score despite high impact. Dark mode had high reach (400 users) but minimal per-user impact (0.25). Even at only 1.5 person-months of effort, its score of 67 fell below the capacity line.
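The capacity line can be sketched as a walk down the ranked list that stops at the first item that no longer fits. This sketch assumes that reading of "drawing the line". Some teams instead skip an oversized item and keep filling capacity with smaller items below it. The efforts come from the table above, and `draw_capacity_line` is a hypothetical helper name.

```python
def draw_capacity_line(ranked_items, capacity):
    """Split (name, effort) pairs, already sorted by RICE score, into
    committed and deferred lists at the first item that does not fit."""
    committed, deferred = [], []
    used = 0.0
    for index, (name, effort) in enumerate(ranked_items):
        if used + effort > capacity:
            # This item and everything ranked below it fall under the line
            deferred = [n for n, _ in ranked_items[index:]]
            break
        committed.append(name)
        used += effort
    return committed, deferred, used


# (initiative, effort in person-months), in descending RICE order
ranked = [
    ("Automated onboarding flow", 3),
    ("Bulk CSV import for admins", 1),
    ("Dashboard redesign", 2),
    ("SSO integration", 2),
    ("Mobile push notifications", 2),
    ("API rate limit overhaul", 2),
    ("Dark mode", 1.5),
    ("Custom report builder", 3),
]

committed, deferred, used = draw_capacity_line(ranked, capacity=12)
print(f"Committed {len(committed)} items using {used} of 12 person-months")
print("Deferred:", ", ".join(deferred))
```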

Key RICE lessons: Confidence is the tiebreaker -- two items with similar reach and impact diverge sharply at 90% versus 40% confidence, which pushes teams toward validating assumptions before committing resources. Effort creates non-obvious winners: bulk CSV import ranked second despite reaching only 50 users, because its massive per-user impact and 1-month effort made the ratio extremely favorable.

MoSCoW Prioritization: Full Worked Example

MoSCoW categorizes deliverables into four tiers: Must have, Should have, Could have, and Won't have (this time). Developed by Dai Clegg at Oracle in 1994, it has become standard in agile and project management for scope negotiations.

The Scenario

A consulting team is scoping a 12-week CRM implementation for a mid-market financial services client. The client's stakeholders have submitted 15 requirements. The team has 480 person-hours of delivery capacity. The project sponsor needs a clear scope agreement before work begins.

The Categorization

Must Have -- 270 hours, 56% of capacity:

| # | Requirement | Effort (hrs) | Rationale |
|---|---|---|---|
| 1 | Data migration from legacy system | 80 | No CRM without data. 12,000 accounts must transfer. |
| 2 | Sales pipeline with 5 custom stages | 60 | Core revenue workflow. Non-negotiable. |
| 3 | Role-based access control (4 roles) | 40 | Regulatory requirement. Compliance blocks launch without it. |
| 4 | Email integration (Outlook sync) | 50 | 200+ emails/day. Manual logging is not viable. |
| 5 | Basic reporting dashboard (3 reports) | 40 | Sponsor requires pipeline visibility from day one. |

Should Have -- 120 hours, 25% of capacity:

| # | Requirement | Effort (hrs) | Rationale |
|---|---|---|---|
| 6 | Automated lead scoring | 40 | 20% efficiency gain, but reps can qualify manually at launch. |
| 7 | Custom email templates (25) | 30 | Saves 15 min/day per rep, but manual compose works initially. |
| 8 | Document attachment per account | 25 | SharePoint covers this gap temporarily. |
| 9 | Mobile app configuration | 25 | 30% of reps are field-based, but tablets with a browser work short-term. |

Could Have -- 90 hours, 19% of capacity:

| # | Requirement | Effort (hrs) | Rationale |
|---|---|---|---|
| 10 | Automated follow-up reminders | 20 | Calendar reminders substitute. |
| 11 | Client portal for document sharing | 30 | Marketing wants it, not part of core sales workflow. |
| 12 | Advanced analytics (cohort, win/loss) | 40 | Basic reports cover launch needs. |

Won't Have (this phase):

| # | Requirement | Rationale |
|---|---|---|
| 13 | AI-powered deal prediction | Needs 6+ months of pipeline data first. |
| 14 | Legacy billing integration | Billing team mid-migration; target unstable until Q3. |
| 15 | Multi-language support (4 languages) | Only 8% of accounts are non-English. Phase 2. |

The Scope Decision

Musts consume 270 of 480 hours (56%), under the 60% ceiling that DSDM guidance recommends for Must Haves. The team commits to all 5 Musts and 4 Shoulds (390 hours, 81% of capacity), leaving 90 hours as buffer. If the overrun on committed work stays under 10% (about 39 hours), Could Haves enter from the remaining buffer in order, starting with follow-up reminders (20 hours).
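The scope arithmetic can be checked mechanically. The following is a minimal Python sketch that copies the hour estimates from the tables. It reads "under 10% overrun" as follows: the committed Must and Should work took less than 10% more hours than planned, and Could Haves then enter in listed order while they still fit in what remains. That reading is an assumption. The names `admit_coulds`, `MUST_CEILING`, `OVERRUN_LIMIT` and the shortened requirement labels are illustrative.

```python
CAPACITY_HOURS = 480
MUST_CEILING = 0.60   # Musts should stay at or under 60% of capacity
OVERRUN_LIMIT = 0.10  # Could Haves enter only if overrun stays under 10%

# Hour estimates copied from the MoSCoW tables (labels shortened)
musts = {"Data migration": 80, "Sales pipeline": 60, "Access control": 40,
         "Outlook sync": 50, "Reporting dashboard": 40}
shoulds = {"Lead scoring": 40, "Email templates": 30,
           "Document attachments": 25, "Mobile app config": 25}
coulds = [("Follow-up reminders", 20), ("Client portal", 30),
          ("Advanced analytics", 40)]  # agreed entry order

must_hours = sum(musts.values())
committed_hours = must_hours + sum(shoulds.values())
if must_hours > MUST_CEILING * CAPACITY_HOURS:
    raise ValueError("Musts exceed the 60% ceiling; renegotiate scope")


def admit_coulds(overrun_fraction):
    """Return the Could Haves that fit after committed work overran
    its estimate by `overrun_fraction` (0.05 means 5% over)."""
    if overrun_fraction >= OVERRUN_LIMIT:
        return []
    remaining = CAPACITY_HOURS - committed_hours * (1 + overrun_fraction)
    admitted = []
    for name, hours in coulds:
        if hours > remaining:
            break  # Could Haves enter strictly in the agreed order
        admitted.append(name)
        remaining -= hours
    return admitted


print(f"Musts: {must_hours} h ({must_hours / CAPACITY_HOURS:.0%} of capacity)")
print(f"Committed: {committed_hours} h, buffer: {CAPACITY_HOURS - committed_hours} h")
print("Coulds admitted at a 5% overrun:", admit_coulds(0.05))
```

At a 5% overrun, 409.5 committed hours leave 70.5 hours. That is enough for reminders and the client portal but not for advanced analytics.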

The Won't list is documented in the statement of work. When a VP asks about AI deal prediction in week 6, the team points to the signed agreement.

Key MoSCoW lessons: The "Must" test is binary: "If we delivered everything except this, would the project still succeed?" If yes, it is not a Must regardless of who requested it. The Won't list is equally important -- it sets expectations upfront and prevents scope creep.


The Eisenhower Matrix: Quick Reference

The Eisenhower Matrix sorts tasks along two dimensions: urgency (deadline within 48 hours) and importance (advances a stated goal). The result is four quadrants: Do (Q1, urgent + important), Schedule (Q2, important, not urgent), Delegate (Q3, urgent, not important), and Eliminate (Q4, neither). It is the fastest method in this guide: under 10 seconds per task once you have internalized the criteria.
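The two criteria translate directly into a classifier. Below is a minimal Python sketch. It assumes each task carries an optional deadline and a yes/no judgment about whether it advances a stated goal. The `Task` class and `quadrant` function are hypothetical names, overdue tasks count as urgent, and the sample tasks are taken from the Monday triage described later.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

URGENT_WINDOW = timedelta(hours=48)  # urgent = deadline within 48 hours


@dataclass
class Task:
    title: str
    deadline: Optional[datetime]  # None when the task has no deadline
    advances_goal: bool           # importance: does it advance a stated goal?


def quadrant(task: Task, now: datetime) -> str:
    """Map a task to its Eisenhower quadrant. Overdue tasks count as urgent."""
    urgent = task.deadline is not None and task.deadline - now <= URGENT_WINDOW
    if urgent and task.advances_goal:
        return "Q1 Do"
    if task.advances_goal:
        return "Q2 Schedule"
    if urgent:
        return "Q3 Delegate"
    return "Q4 Eliminate"


now = datetime(2024, 6, 3, 9, 0)  # a Monday-morning triage; date is arbitrary
inbox = [
    Task("Quarterly roadmap proposal", now + timedelta(days=10), True),
    Task("Routine Slack responses", now + timedelta(hours=6), False),
    Task("Stakeholder report nobody has opened", None, False),
]
for task in inbox:
    print(f"{quadrant(task, now)}: {task.title}")
```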

For a deep dive with worked examples across industries, common mistakes, and team-level applications, see our Eisenhower Matrix Guide. For hands-on examples by role, see Eisenhower Matrix Examples.

Time-Blocking: Turning Ranked Lists into Action

Time-blocking is not a ranking method -- it is the execution layer that makes any ranking system work. Instead of working through a to-do list reactively, you assign every task to a specific calendar slot.

How to apply it:

- Rank tasks using RICE, MoSCoW, or the Eisenhower Matrix

- Estimate duration for each task (add 30% buffer for the planning fallacy)

- Assign important work to your peak energy hours (typically morning)

- Batch low-value tasks into a single afternoon block

- Leave 20-30% of your calendar unscheduled for emergencies
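The steps above can be sketched as a simple day planner. This minimal Python sketch makes several assumptions:

- an 8-hour day starting at 9:00, treated as the peak-energy window;
- a 30% buffer on every estimate;
- 25% of the day left unscheduled, the middle of the 20-30% range.

Tasks arrive already ranked. If a task would exceed the schedulable budget, it is pushed to another day, but smaller, lower-ranked tasks can still fit. `plan_day` and the task list are hypothetical.

```python
DAY_START_MIN = 9 * 60  # 9:00, assumed start of peak-energy hours
WORKDAY_MIN = 8 * 60    # assumed 8-hour working day
BUFFER_PCT = 30         # add 30% to every estimate (planning fallacy)
UNSCHEDULED_PCT = 25    # leave 20-30% of the day open; 25% assumed


def clock(minutes):
    """Format minutes since midnight as HH:MM."""
    return f"{minutes // 60:02d}:{minutes % 60:02d}"


def plan_day(ranked_tasks):
    """Place (title, estimate_minutes) pairs, highest priority first, into
    back-to-back calendar blocks. Returns (blocks, overflow)."""
    budget = WORKDAY_MIN * (100 - UNSCHEDULED_PCT) // 100
    blocks, overflow = [], []
    used = 0
    for title, estimate in ranked_tasks:
        # Integer ceiling of estimate * 1.3, avoiding float rounding
        padded = (estimate * (100 + BUFFER_PCT) + 99) // 100
        if used + padded > budget:
            overflow.append(title)  # move to another day
            continue
        start = DAY_START_MIN + used
        blocks.append((clock(start), clock(start + padded), title))
        used += padded
    return blocks, overflow


# Hypothetical ranked list: (task, raw estimate in minutes)
tasks = [
    ("Quarterly roadmap proposal", 90),
    ("Backlog review", 60),
    ("Spec draft", 90),
    ("Competitor teardown", 60),
    ("Batched Slack responses", 30),
]
blocks, overflow = plan_day(tasks)
for start, end, title in blocks:
    print(f"{start}-{end}  {title}")
print("Move to another day:", overflow)
```

For simplicity, the open time collects at the end of the day and there is no lunch break. A real calendar would spread the slack between blocks. The sketch only shows how the buffer and the unscheduled share shrink what a single day can hold.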

When to use it: Always, regardless of which ranking method you choose. Time-blocking turns a prioritized list into an executable schedule. Treat time blocks as meetings with yourself that require the same cancellation threshold as a meeting with a client.

Choosing the Right Method for Your Context

The right framework depends on three factors: team size, decision type, and time horizon. Here is when each method wins and when it falls short.

RICE Beats MoSCoW When...

You have a large backlog (50+ items) and need a repeatable, quantitative ranking. MoSCoW groups items into 4 buckets but does not rank within a bucket. If you have 20 "Should Haves," MoSCoW gives no guidance on which to tackle first. RICE gives every item a numeric score, making the order unambiguous.

Best fit: Product teams with ongoing backlogs, cross-functional teams that need to agree on sequence, quarterly planning.

MoSCoW Beats RICE When...

You need stakeholder alignment on scope rather than a ranked list. Telling a VP their request "scored 47 on RICE" invites argument about scoring. Telling them it is a "Should Have delivered after all Musts" is a conversation about sequencing, not scoring.

Best fit: Client-facing project scoping, fixed-timeline engagements, non-technical stakeholder negotiations.

The Eisenhower Matrix Beats Both When...

You need speed over precision. Sorting 30 tasks in an Eisenhower triage takes 5 minutes. Scoring 30 items on RICE takes 60-90 minutes. The Eisenhower Matrix also handles urgency, which neither RICE nor MoSCoW addresses.

Best fit: Individual task management, weekly team triage, any situation where you need to decide what to do today rather than what to build this quarter.

Combining Methods

In practice, teams layer methods. Use the Eisenhower Matrix for daily decisions. Use MoSCoW for project scope negotiations with stakeholders. Use RICE for quarterly backlog grooming. Time-blocking turns any of these into an executable schedule. A decision matrix can help when choices involve five or more evaluation criteria. For visual layouts to present these trade-offs, the prioritization matrix template and impact-effort matrix provide ready-to-use slides.

A Real-World Weekly Workflow

Here is how a product manager at a 40-person SaaS company actually applies these methods across a typical week.

Monday Morning Triage (15 minutes)

9:00 AM. Eisenhower sort of 22 accumulated items. Result: 4 in Q1, 7 in Q2, 8 in Q3, 3 in Q4. Cross out the Q4 items immediately -- a stakeholder report nobody opened in 6 weeks, a follow-up on a churned account's feature request, and a recurring meeting with no agenda. Delegate Q3: assign 3 bug triage tasks to the junior PM, batch 5 routine Slack responses into a 30-minute afternoon block. Time-block Q1 and Q2 into the calendar. The highest-impact Q2 task -- the quarterly roadmap proposal -- gets the 9:30-11:00 slot on Tuesday and Thursday mornings.

Wednesday Sprint Planning (45 minutes)

The team reviews the RICE-scored backlog. 14 items scored, 40 story points of capacity. Items enter in RICE order: the top 6 (38 points) make it in. Item 7 (dashboard enhancement, RICE 58) misses by 2 points. A stakeholder requests adding a "quick fix" mid-meeting. The PM asks: "What is its RICE score?" Team estimates: Reach 30, Impact 1, Confidence 60%, Effort 0.5 months -- RICE score 36. It ranks 9th. It waits.

Thursday Scope Review with Client (30 minutes)

The client wants 3 new features added. The PM pulls up the MoSCoW board. Current Musts: 58% of capacity. One new feature qualifies as a Should (event tracking, 20 hours, supports a stated KPI), one is a Could (custom color theming, 8 hours, cosmetic), one is a Won't (multi-tenant white labeling, 80 hours, would delay launch 3 weeks). The client agrees because trade-offs are visible on one slide.

Friday Review (5 minutes)

Time split this week: 68% on Q1+Q2, up from 55% three weeks ago. The improvement came from eliminating 3 Q4 tasks and batching Q3 into two 30-minute blocks instead of letting it interrupt throughout the day.

Handling Conflicting Priorities from Multiple Stakeholders

The hardest prioritization problem is not ranking tasks. It is managing three stakeholders who each believe their request is the top priority.

Make the trade-off visible: list all competing requests with effort estimates, show total capacity (if you have 40 hours and requests total 80, the math speaks for itself), and ask stakeholders to stack-rank using MoSCoW language. This shifts the conversation from "why isn't my request being done?" to "given limited capacity, what should we do first?" A decision matrix can help structure multi-criteria evaluations.

When stakeholders cannot agree, document the conflict, escalate to the shared manager with a recommendation, and accept the decision. The worst outcome is silently trying to do both and delivering neither well.

When presenting trade-offs to leadership, a prioritization matrix or impact-effort matrix on a single slide communicates more than a spreadsheet. Deckary's slide library includes pre-built matrix layouts with alignment shortcuts for precise positioning.

Summary

Learning how to prioritize tasks is a skill that compounds. The three core ranking methods, plus time-blocking as the execution layer, address different levels of complexity:

- RICE scoring -- quantitative ranking for large backlogs. Use when you need a numeric order across 50+ items and a defensible rationale for every ranking decision.

- MoSCoW -- scope negotiation language for stakeholder alignment. Use when the question is "what is in and what is out" rather than "what is the order."

- Eisenhower Matrix -- fast daily sorting by urgency and importance. Use for personal and team triage when speed matters more than precision.

- Time-blocking -- turns any ranked list into an executable schedule. Use always, regardless of ranking method.

The weekly rhythm: Monday morning Eisenhower triage for the week's tasks. Wednesday sprint planning with RICE-scored backlogs. Thursday scope reviews with MoSCoW for client negotiations. Friday review of Q1+Q2 time percentage. Teams that adopt this rhythm consistently shift from 35-40% strategic work to 65%+ within three weeks.

Explore the prioritization matrix template for a ready-to-use slide layout, or browse the full strategic frameworks collection for related decision-making tools including the Eisenhower Matrix template and impact-effort matrix.
