Root Cause Analysis Examples: 5 Worked Cases From Problem to Fix

Root cause analysis examples across IT, manufacturing, customer churn, project overruns, and turnover. Full walkthroughs with 5 Whys, fishbone, and fault tree.

Root cause analysis examples are everywhere in consulting and operations, but most guides stop at the fishbone diagram and call it done. As the American Society for Quality's RCA body of knowledge makes clear, drawing a diagram is not root cause analysis. RCA is the full process: defining the problem precisely, collecting data, generating hypotheses, verifying the actual root cause, and implementing corrective action that prevents recurrence.

After applying root cause analysis across 60+ operations, IT, and organizational engagements, we have found that teams fail not because they lack tools but because they skip steps. They brainstorm causes on a whiteboard, pick the most plausible one, and implement a fix without ever verifying with data. Six months later, the same problem returns.

This guide walks through five complete root cause analysis examples across different domains, covers the four major RCA methods with a comparison table, and identifies the mistakes that turn a rigorous diagnostic process into guesswork. For the broader framework context, see our Strategic Frameworks Guide.

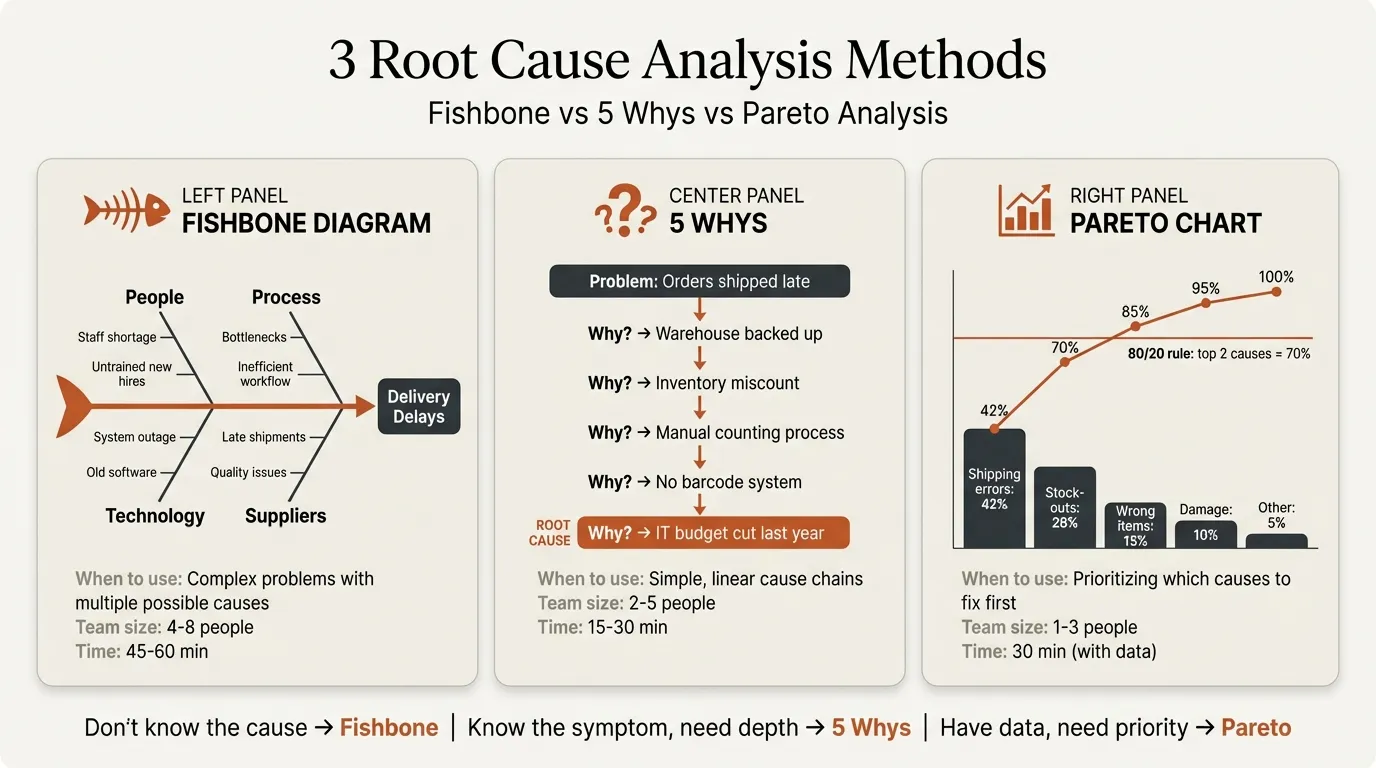

Root Cause Analysis Methods Compared

Before working through examples, here is how the four primary RCA methods compare. Most investigations use at least two in combination.

| Method | Best For | Complexity | Time Required | Output |
|---|---|---|---|---|
| 5 Whys | Simple causal chains with a single thread | Low | 15-30 min | Linear cause chain |
| Fishbone (Ishikawa) diagram | Problems with multiple potential cause categories | Medium | 1-2 hours | Categorized cause map |
| Fault tree analysis | System failures with Boolean logic (AND/OR gates) | High | 4-8 hours | Logic tree of failure paths (probability-weighted when quantified) |
| Pareto analysis | Prioritizing which causes drive the most impact | Medium | 1-3 hours | Ranked cause-impact chart |

5 Whys works best when you suspect a straightforward causal chain. Ask "why?" iteratively until you reach a cause you can act on. The risk: it follows a single thread and can miss parallel causes. For more depth, see our 5 Whys Examples.

Fishbone diagrams organize potential causes into categories (People, Process, Equipment, Materials, Environment, Management). They are brainstorming tools, not diagnostic tools -- you still need data to confirm which causes are real. See our Fishbone Diagram Examples for detailed walkthroughs.

Fault tree analysis (FTA), a method developed for reliability engineering in the aerospace industry, models failure paths using AND/OR logic gates. An AND gate means all sub-causes must occur for the failure. An OR gate means any one sub-cause is sufficient. FTA is the most rigorous method. Used qualitatively, it identifies which combinations of events can produce the failure; quantitative FTA additionally requires a probability estimate for each basic event.
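When FTA is quantified, gate probabilities are usually combined under the assumption that the basic events are independent, an assumption worth checking for each tree. For n input events with probabilities p_i:

$$
P_{\text{AND}} = \prod_{i=1}^{n} p_i
\qquad
P_{\text{OR}} = 1 - \prod_{i=1}^{n} (1 - p_i)
$$

For example, two independent events at 0.1 each give 0.01 through an AND gate and 1 - 0.9^2 = 0.19 through an OR gate.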

Pareto analysis ranks causes by impact. When 15 things could be causing the problem, Pareto shows which few account for most of the damage (the classic rule of thumb is roughly 80% of the effect from 20% of the causes). It does not tell you why those causes exist; you still need 5 Whys or a fishbone diagram for that.

The 5-Step RCA Process

Every root cause analysis example in this guide follows the same five-step structure. Skipping any step is where most teams go wrong.

- Define the problem statement. Specific, measurable, bounded by time and scope. "Quality is bad" is not a problem statement. "Defect rate on Product Line A increased from 1.2% to 3.8% between January and March 2026" is.

- Collect data. Incident logs, process metrics, timelines, interviews. Collect data before brainstorming, not after.

- Identify potential causes. Use fishbone diagrams, 5 Whys, or fault trees to generate hypotheses. This is brainstorming -- you are not committing to a cause yet.

- Verify the root cause. Test your hypothesis with data. Does removing this cause eliminate the problem? Does the data show a causal relationship, not just correlation?

- Implement corrective action and monitor. Fix the root cause, not the symptom. Track the metric to confirm the problem does not recur.
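One way to make the step order enforceable is to record each investigation as a checklist that refuses out-of-order entries. The sketch below is hypothetical: the `RCARecord` class and the step names are illustrative, not taken from any RCA tool. It simply rejects a corrective action that arrives before verification.

```python
from dataclasses import dataclass, field

# The five steps in the order described above (names are illustrative).
STEPS = [
    "define_problem",
    "collect_data",
    "identify_causes",
    "verify_root_cause",
    "corrective_action",
]


@dataclass
class RCARecord:
    """Hypothetical investigation record that only accepts steps in order."""
    completed: list[str] = field(default_factory=list)

    def complete(self, step: str) -> None:
        if step not in STEPS:
            raise ValueError(f"unknown step: {step}")
        if len(self.completed) == len(STEPS):
            raise ValueError("all steps already completed")
        expected = STEPS[len(self.completed)]
        if step != expected:
            # Skipping ahead (e.g. fixing before verifying) is rejected.
            raise ValueError(f"cannot record {step!r}; next required step is {expected!r}")
        self.completed.append(step)


rca = RCARecord()
for step in ("define_problem", "collect_data", "identify_causes"):
    rca.complete(step)
try:
    rca.complete("corrective_action")  # jumps past verification
except ValueError as err:
    print(err)  # cannot record 'corrective_action'; next required step is 'verify_root_cause'
```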

Root Cause Analysis Example 1: IT Server Downtime

Problem statement: Production server outages occurred 4 times in 8 weeks, averaging 2.3 hours per incident. Total business impact: $184K in lost revenue and 12 SLA breaches.

Data collected: Incident logs, server monitoring data, change management records, on-call response timelines.

Cause identification (5 Whys):

- Why did the server go down? Memory utilization hit 100% and the application crashed.

- Why did memory hit 100%? A memory leak in the latest application release accumulated over 5-7 days.

- Why was the memory leak not caught before deployment? The staging environment runs for only 2 hours during load testing, not long enough to surface leaks.

- Why is load testing limited to 2 hours? The testing budget was cut last quarter, and extended soak tests were deprioritized.

- Why were soak tests deprioritized? No documented requirement for soak testing in the deployment checklist; the decision was left to individual engineers.

Root cause: Missing soak test requirement in the deployment process. The memory leak was the proximate cause, but without a process requiring extended testing, similar leaks will ship again.

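Verification: Reviewed the four outages against the last 12 months of incidents. Three of the four traced to issues that would have surfaced in a 24-hour soak test: a leak that takes 5-7 days to exhaust memory will not crash a 24-hour run, but it does show up as memory usage that climbs steadily and never returns to baseline. The fourth outage was an unrelated network failure.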

Corrective action: Added mandatory 24-hour soak test to the deployment checklist. Set automated memory threshold alerts at 80% utilization. Result: zero memory-related outages in the following 16 weeks.
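The two corrective actions can be sketched as a soak-test check. This is a minimal illustration, not the team's actual tooling: it assumes memory utilization is sampled hourly as a percentage, fits a least-squares slope to flag steady growth, and raises a separate alert at the 80% threshold. The function names, the hourly interval, and the 0.1 percentage-points-per-hour leak tolerance are all hypothetical.

```python
def memory_slope(samples: list[float], interval_hours: float = 1.0) -> float:
    """Least-squares slope of memory utilization, in percentage points per hour."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = [i * interval_hours for i in range(n)]
    mean_x = sum(xs) / n
    mean_y = sum(samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, samples))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def soak_test_flags(samples: list[float], interval_hours: float = 1.0,
                    max_slope: float = 0.1, alert_pct: float = 80.0) -> dict[str, bool]:
    """Hypothetical soak-test verdict: steady growth suggests a leak; >= alert_pct is an alert."""
    return {
        "suspected_leak": memory_slope(samples, interval_hours) > max_slope,
        "threshold_alert": max(samples) >= alert_pct,
    }


leaky = [40 + 0.5 * h for h in range(25)]  # 24 hours of hourly samples, +0.5 points/hour
steady = [45.0] * 25
print(soak_test_flags(leaky))   # {'suspected_leak': True, 'threshold_alert': False}
print(soak_test_flags(steady))  # {'suspected_leak': False, 'threshold_alert': False}
```

At 0.5 points per hour, memory would climb from 40% to 100% in 120 hours (5 days), in line with the 5-7 day accumulation above, yet the trend is unambiguous within the 24-hour window. This is why the leak check looks at the slope rather than waiting for the 80% alert.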

Root Cause Analysis Example 2: Manufacturing Defect Rate Spike

Problem statement: Defect rate on Product Line A increased from 1.2% to 3.8% over Q1 2026, resulting in $290K in scrap costs and 3 customer complaints.

Data collected: Defect logs by shift, machine, operator, and material batch. Process parameter trends (temperature, pressure, cycle time). Maintenance records.

Cause identification (Fishbone diagram):

Categories explored:

- Equipment: Machine 3 calibration drift detected -- last calibrated 47 days ago vs. 30-day requirement

- Materials: New resin supplier introduced in January -- batch testing showed 12% higher moisture content

- People: Two new operators started in December -- defect rates 2.1x higher during their shifts

- Process: No incoming material inspection for moisture content

- Environment: Ambient humidity 8% higher than spec during February (facility HVAC issue)

Pareto analysis of causes:

| Cause | % of Total Defects | Cumulative % |
|---|---|---|
| High moisture content in new resin | 42% | 42% |
| Machine 3 calibration drift | 28% | 70% |
| New operator error rate | 18% | 88% |
| Humidity excursion | 12% | 100% |
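The cumulative column comes from sorting causes by share and keeping a running total. A minimal sketch using the table's percentages (the function names are illustrative):

```python
def pareto(shares: dict[str, float]) -> list[tuple[str, float, float]]:
    """Sort causes by share (descending) and add a running cumulative percentage."""
    total = sum(shares.values())
    ranked = sorted(shares.items(), key=lambda item: item[1], reverse=True)
    rows, running = [], 0.0
    for cause, share in ranked:
        pct = 100 * share / total
        running += pct
        rows.append((cause, round(pct, 1), round(running, 1)))
    return rows


def vital_few(rows: list[tuple[str, float, float]], cutoff: float = 80.0) -> list[str]:
    """Causes needed, in rank order, for the cumulative share to reach the cutoff."""
    selected = []
    for cause, _pct, cumulative in rows:
        selected.append(cause)
        if cumulative >= cutoff:
            break
    return selected


defect_shares = {
    "High moisture content in new resin": 42,
    "Machine 3 calibration drift": 28,
    "New operator error rate": 18,
    "Humidity excursion": 12,
}
rows = pareto(defect_shares)
for cause, pct, cumulative in rows:
    print(f"{cause}: {pct}% (cumulative {cumulative}%)")
print(vital_few(rows))
```

With the common 80% cutoff, `vital_few` also returns the new-operator cause, because the cumulative total only passes 80% at the third row. That fits the verification step: Pareto ranks causes by impact, but only the data check showed the operators were a contributing factor rather than a root cause.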

Root cause verification: The resin moisture issue and calibration drift together accounted for 70% of defects. When we isolated production runs that used the old supplier's resin on properly calibrated machines, defect rates were 1.1% -- consistent with the historical baseline. The new operators and humidity were contributing factors, not root causes.

Corrective actions:

- Implemented incoming moisture testing for all resin batches (reject threshold: 0.3% moisture content)

- Reset Machine 3 calibration schedule to biweekly with automated alerts

- Added structured training program for new operators with 4-week supervised ramp
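The first two corrective actions are threshold rules that can be sketched in a few lines. Assumptions, all hypothetical: a batch at exactly 0.3% moisture is accepted, "biweekly" means every 14 days, and the function names are illustrative.

```python
from datetime import date

MOISTURE_REJECT_PCT = 0.3       # reject threshold from the corrective action above
CALIBRATION_INTERVAL_DAYS = 14  # assumption: "biweekly" read as every two weeks


def accept_resin_batch(moisture_pct: float) -> bool:
    """Accept a batch only if measured moisture is at or below the reject threshold."""
    return moisture_pct <= MOISTURE_REJECT_PCT


def calibration_overdue(last_calibrated: date, today: date) -> bool:
    """True once a machine goes longer than the calibration interval without calibration."""
    return (today - last_calibrated).days > CALIBRATION_INTERVAL_DAYS


# Machine 3's situation: last calibrated 47 days earlier (dates are illustrative).
print(calibration_overdue(date(2026, 1, 1), date(2026, 2, 17)))  # True
print(accept_resin_batch(0.42))  # False
```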

Result: defect rate returned to 1.3% within 6 weeks.

Root Cause Analysis Example 3: Customer Satisfaction Decline

Problem statement: NPS dropped from 52 to 34 over two quarters for a B2B SaaS platform serving 1,200 mid-market accounts. Churn risk flagged by the customer success team.

Data collected: NPS survey verbatims (800+ responses), support ticket volume and resolution times, feature usage analytics, customer health scores, renewal data.

Cause identification (Fishbone + Pareto):

Fishbone categories:

- Product: Three major features launched in Q3-Q4 with reported bugs; usage of core reporting module dropped 23%

- Support: Average first-response time increased from 4 hours to 11 hours; headcount flat while ticket volume grew 40%

- Onboarding: New self-serve onboarding flow launched in Q3; 30-day activation rate dropped from 68% to 51%

- Pricing: Mid-cycle price increase of 15% announced in October with 30-day notice

Pareto of NPS detractor themes from verbatims:

| Theme | % of Detractor Comments |
|---|---|
| Slow support response times | 38% |
| Bugs in recent feature releases | 27% |
| Poor onboarding experience | 20% |
| Price increase without added value | 15% |

Root cause verification: Segmented NPS by cohort. Customers who never contacted support had an NPS of 48. Customers with 2+ tickets in the past quarter had an NPS of 19. The support bottleneck was the primary driver, amplified by the increase in bug-related tickets from rushed releases.
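The cohort check relies on the standard NPS calculation: the percentage of promoters (scores 9-10) minus the percentage of detractors (scores 0-6). A minimal sketch of computing it per segment; the account IDs, ticket counts, and scores are hypothetical stand-ins for the support and survey data.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("no responses")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def nps_by_segment(responses: list[tuple[str, int]], segment_of) -> dict[str, float]:
    """Group (account_id, score) responses with segment_of(account_id) and score each group."""
    groups: dict[str, list[int]] = {}
    for account_id, score in responses:
        groups.setdefault(segment_of(account_id), []).append(score)
    return {segment: nps(scores) for segment, scores in groups.items()}


# Hypothetical data: support tickets per account last quarter, and survey responses.
tickets = {"acct-1": 0, "acct-2": 0, "acct-3": 3, "acct-4": 2}
responses = [("acct-1", 10), ("acct-2", 9), ("acct-3", 4), ("acct-4", 8)]


def ticket_band(account_id: str) -> str:
    n = tickets[account_id]
    return "no tickets" if n == 0 else ("1 ticket" if n == 1 else "2+ tickets")


print(nps_by_segment(responses, ticket_band))  # {'no tickets': 100.0, '2+ tickets': -50.0}
```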

The deeper root cause: engineering released three major features in a compressed timeline to meet a board-level OKR, which generated a spike in bug reports that overwhelmed a support team that had not been staffed for the increased volume.

Corrective actions:

- Hired 4 additional support specialists with 2-week onboarding ramp

- Implemented release quality gates requiring a critical bug rate below 2% before general availability (GA)

- Reverted the self-serve onboarding flow for accounts below the health score threshold and assigned them CSM-assisted onboarding

Result: NPS recovered to 45 within one quarter. Support first-response time dropped to 5 hours.

Root Cause Analysis Example 4: Project Cost Overrun

Problem statement: A system integration project budgeted at $2.4M delivered at $3.7M -- a 54% overrun. Timeline extended from 9 months to 14 months.

Data collected: Project budget vs. actuals by work package, change request log, resource utilization reports, milestone tracking, stakeholder interview transcripts.

Cause identification (5 Whys + Fault Tree):

5 Whys thread:

- Why did costs overrun by $1.3M? Scope expanded by 35% through 22 change requests after project kickoff.

- Why were 22 change requests submitted? Requirements were documented at a high level with significant ambiguity in 7 of 12 work packages.

- Why were requirements ambiguous? The discovery phase was compressed from 6 weeks to 3 weeks to meet the client's requested start date.

- Why was discovery compressed? The sales team committed to a start date before the delivery team completed scoping.

- Why did sales commit before scoping was complete? No formal handoff gate requiring signed-off scope before contract execution.

Fault tree (simplified):

Cost Overrun (top event) = Scope Creep OR Resource Inefficiency OR Vendor Delays

- Scope Creep = Ambiguous Requirements AND No Change Control Threshold

- Ambiguous Requirements = Compressed Discovery AND Missing Stakeholder Sign-off

- Resource Inefficiency = Rework from Scope Changes AND Staff Turnover Mid-Project
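The simplified tree can be evaluated as Boolean logic: given the set of basic events that occurred, an AND gate fires only if all of its children fire, and an OR gate fires if any child does. A minimal sketch; the nested-tuple encoding and snake_case event names are illustrative assumptions, and rework is modeled as a separate leaf even though it partly stems from scope changes.

```python
# Gates are ("AND", children) or ("OR", children); leaves are basic-event names.
FAULT_TREE = ("OR", [
    ("AND", [                                    # Scope Creep
        ("AND", ["compressed_discovery",         # Ambiguous Requirements
                 "missing_stakeholder_signoff"]),
        "no_change_control_threshold",
    ]),
    ("AND", ["rework_from_scope_changes",        # Resource Inefficiency
             "staff_turnover_mid_project"]),
    "vendor_delays",
])


def occurs(node, events: set[str]) -> bool:
    """Return whether the (sub)tree's event occurs, given the basic events that happened."""
    if isinstance(node, str):
        return node in events
    gate, children = node
    results = [occurs(child, events) for child in children]
    if gate == "AND":
        return all(results)
    if gate == "OR":
        return any(results)
    raise ValueError(f"unknown gate: {gate}")


observed = {"compressed_discovery", "missing_stakeholder_signoff", "no_change_control_threshold"}
print(occurs(FAULT_TREE, observed))  # True
# With a change control threshold in place, the scope-creep branch is blocked.
print(occurs(FAULT_TREE, observed - {"no_change_control_threshold"}))  # False
```

Removing any one input to an AND gate blocks that branch, so a change control threshold stops ambiguous requirements from turning into scope creep even when discovery is compressed.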

Root cause verification: Analyzed the 22 change requests. 18 of 22 traced to requirements gaps in the 7 ambiguous work packages. The remaining 4 were genuine scope additions requested by the client. Without the 18 requirements-driven changes, estimated cost would have been $2.6M -- within the 10% contingency.
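The overrun and contingency figures follow directly from the budget numbers (in millions of dollars):

$$
\text{Actual overrun} = \frac{3.7 - 2.4}{2.4} \approx 54\%
\qquad
\text{Overrun without the 18 changes} = \frac{2.6 - 2.4}{2.4} \approx 8.3\% < 10\%
$$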

Corrective actions:

- Implemented mandatory discovery phase minimum (4 weeks) with formal sign-off gate before contract execution

- Added change control threshold: any change request exceeding 5% of remaining budget requires steering committee approval

- Created requirements completeness checklist with 15 verification criteria
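The change control rule ties the approval bar to the remaining budget rather than the original one, so the same change becomes harder to push through as the project nears completion. A minimal sketch; the function name and the behavior when no budget remains are assumptions.

```python
def needs_steering_approval(change_cost: float, remaining_budget: float,
                            threshold: float = 0.05) -> bool:
    """True if a change request exceeds the threshold share of the remaining budget."""
    if remaining_budget <= 0:
        return True  # assumption: with no budget left, every change escalates
    return change_cost > threshold * remaining_budget


print(needs_steering_approval(60_000, 1_000_000))  # True: above the $50K bar
print(needs_steering_approval(40_000, 1_000_000))  # False
print(needs_steering_approval(40_000, 500_000))    # True: bar has dropped to $25K
```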

Result: next three projects of similar size delivered within 8% of budget.

Root Cause Analysis Example 5: Employee Turnover Spike

Problem statement: Voluntary turnover in the engineering department increased from 12% to 24% annualized over two quarters. Cost of replacement estimated at $45K per engineer (recruiting, onboarding, lost productivity).
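Annualized turnover scales the departures in a partial-year period to a 12-month rate. A minimal sketch of the arithmetic; the headcount in the example is hypothetical, since the case does not state it.

```python
def annualized_turnover(departures: int, avg_headcount: float, months: float) -> float:
    """Departures in a period, scaled to a 12-month rate, as a percentage of average headcount."""
    return 100 * departures / avg_headcount * (12 / months)


def replacement_cost(departures: int, cost_per_departure: float = 45_000.0) -> float:
    """Total replacement cost using the case's $45K-per-engineer estimate."""
    return departures * cost_per_departure


# Hypothetical headcount: 6 departures from an average of 100 engineers over 6 months.
print(annualized_turnover(6, 100, 6))  # 12.0
print(replacement_cost(14))            # 630000.0
```

Applied to the 14 departures covered by exit interviews, the $45K estimate implies about $630K in replacement cost.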

Data collected: Exit interview transcripts (14 departures), employee engagement survey results, compensation benchmarking data, promotion history, manager effectiveness scores, Glassdoor reviews.

Cause identification (Fishbone):

- Compensation: Base pay 8% below market median for the region; no equity refresh program

- Management: Two engineering managers scored below 3.0/5.0 on quarterly engagement surveys (team average: 3.8)

- Career growth: Zero promotions in the engineering department over the past 12 months; no published career ladder

- Workload: Average weekly hours self-reported at 52; three consecutive quarters of "crunch" shipping cycles

- Culture: Remote work policy reversed in Q2 with mandatory 4-day in-office requirement

Pareto of exit interview reasons:

| Reason Cited | % of Departing Engineers |
|---|---|
| Lack of career progression | 43% |
| Below-market compensation | 29% |
| Manager relationship | 21% |
| Return-to-office mandate | 7% |

Root cause verification: Cross-referenced departures with manager assignments. 9 of 14 departures came from the two lowest-scoring managers' teams. However, even on teams with high-scoring managers, engineers cited lack of promotion opportunities. We then regressed intent to stay on engagement survey factors: career progression clarity was the strongest predictor (correlation r = 0.71), followed by compensation satisfaction (r = 0.58).
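The r values are Pearson correlation coefficients. A minimal sketch of the calculation follows; on Python 3.10+ the standard library's `statistics.correlation` computes the same quantity. In a single-predictor model, r = 0.71 corresponds to r² ≈ 0.50, so about half the variance in intent to stay moves with career progression clarity. On its own, that is association, not proof of causation. The survey scores below are hypothetical.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2)")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sy = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sx * sy)  # undefined (division by zero) if either sample is constant


# Hypothetical survey scores (1-5) for five engineers.
clarity = [1, 2, 3, 4, 5]
intent_to_stay = [2, 2, 3, 5, 4]
print(round(pearson_r(clarity, intent_to_stay), 2))
```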

Root cause: The absence of a career ladder and promotion framework was the primary driver. Engineers with 2+ years of tenure had no visibility into what was required for advancement. Compensation was a compounding factor, not the root cause -- several departing engineers accepted lateral moves at similar pay with clearer growth paths.

Corrective actions:

- Published engineering career ladder with 6 levels and explicit promotion criteria within 6 weeks

- Conducted market compensation adjustment (average 11% increase for engineers below the 25th percentile)

- Placed the two lowest-scoring managers in a structured coaching program with 90-day improvement plans

- Committed to quarterly promotion cycles with transparent review process

Result: annualized turnover dropped to 14% over the following two quarters. Offer acceptance rate for new hires increased from 62% to 78%.

Common Root Cause Analysis Mistakes

After reviewing dozens of RCA reports, these are the patterns that consistently undermine the process:

Stopping at symptoms. "The server crashed because memory hit 100%" is a symptom, not a root cause. Ask why memory hit 100%, why that was not caught, and why the process allowed it. Keep drilling until you reach something you can change structurally.

Jumping to solutions before completing analysis. Teams often identify a plausible cause in the first 15 minutes and spend the remaining time designing the fix. If you have not verified with data, you are guessing. The manufacturing example above could have led to blaming the new operators: a plausible but incorrect root cause that would have left the resin and calibration problems, which drove 70% of defects, unaddressed.

Not verifying root causes with data. A root cause is verified when removing it eliminates the problem and reintroducing it recreates it. If you cannot test directly, use historical data: isolate periods or segments where the suspected cause was absent and check whether the problem still occurred.
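A minimal sketch of that segment check: pool production runs by the suspected causes and compare defect rates. The field names and run data are hypothetical, loosely shaped like the manufacturing example rather than taken from it.

```python
from collections import defaultdict


def rate_by_segment(runs: list[dict], fields: tuple[str, ...]) -> dict[tuple, float]:
    """Pool production runs by the given fields and return the defect rate (%) per segment."""
    units = defaultdict(int)
    defects = defaultdict(int)
    for run in runs:
        key = tuple(run[f] for f in fields)
        units[key] += run["units"]
        defects[key] += run["defects"]
    return {key: 100 * defects[key] / units[key] for key in units}


runs = [  # hypothetical runs
    {"supplier": "old", "calibrated": True, "units": 1000, "defects": 11},
    {"supplier": "new", "calibrated": True, "units": 1000, "defects": 30},
    {"supplier": "new", "calibrated": False, "units": 500, "defects": 30},
]
print(rate_by_segment(runs, ("supplier", "calibrated")))
# {('old', True): 1.1, ('new', True): 3.0, ('new', False): 6.0}
```

If the segment where the suspected cause is absent still shows the problem, that cause is at most a contributing factor.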

Anchoring on a single cause. Most business problems have multiple contributing factors. The customer satisfaction example had four distinct drivers. Pareto analysis helps you prioritize, but fixing only the top cause there (slow support, 38% of detractor comments) would have left roughly 60% of the problem in place.

Confusing correlation with causation. In the employee turnover example, the return-to-office mandate coincided with the turnover spike, but the data showed it was a minor factor: only 7% of departing engineers cited it. Without that analysis, a team might have reversed the RTO policy and still faced 20%+ turnover.

When to Use Each RCA Method

Choose your method based on problem characteristics:

- Single, linear causal chain: Start with 5 Whys. Fast, low overhead, effective for straightforward problems.

- Multiple potential cause categories: Use a fishbone diagram to organize brainstorming, then verify with data.

- System failure with interdependencies: Use fault tree analysis when failures require specific combinations of sub-causes (AND/OR logic).

- Too many potential causes to investigate all: Use Pareto analysis to rank causes by impact and focus resources on the vital few.

- Complex, high-stakes problems: Combine methods. Start with fishbone to brainstorm, apply Pareto to prioritize, then use 5 Whys to drill into the top causes.

For a ready-to-use framework that walks your team through this process step by step, see our Root Cause Analysis Template. For related analytical frameworks, explore our guides on Decision Tree Examples and the broader Strategic Frameworks Guide.

Key Takeaways

- Root cause analysis is a five-step process, not a single tool. Defining the problem, collecting data, and verifying causes are as important as the brainstorming step.

- The 5 Whys, fishbone diagrams, fault tree analysis, and Pareto analysis each solve different parts of the problem. Combine methods for complex investigations.

- Every root cause must be verified with data. If removing the suspected cause does not eliminate the problem, you found a contributing factor, not the root cause.

- The most common failure mode is stopping too early: treating symptoms or implementing fixes without verification. Rigorous RCA takes longer upfront but cuts the cost of repeated failures.

- Document your RCA process and findings. The analysis is as valuable as the fix because it builds organizational capability for the next problem.
