Fishbone Diagram Examples: 5 Worked Cause-and-Effect Models

Fishbone diagram examples for manufacturing, software, churn, project delays, and service quality. Step-by-step Ishikawa method with full category breakdowns.

Fishbone diagram examples appear in every root cause analysis playbook, but most versions online stop at labeling six empty branches and calling it a framework. That is a brainstorming template, not an analysis.

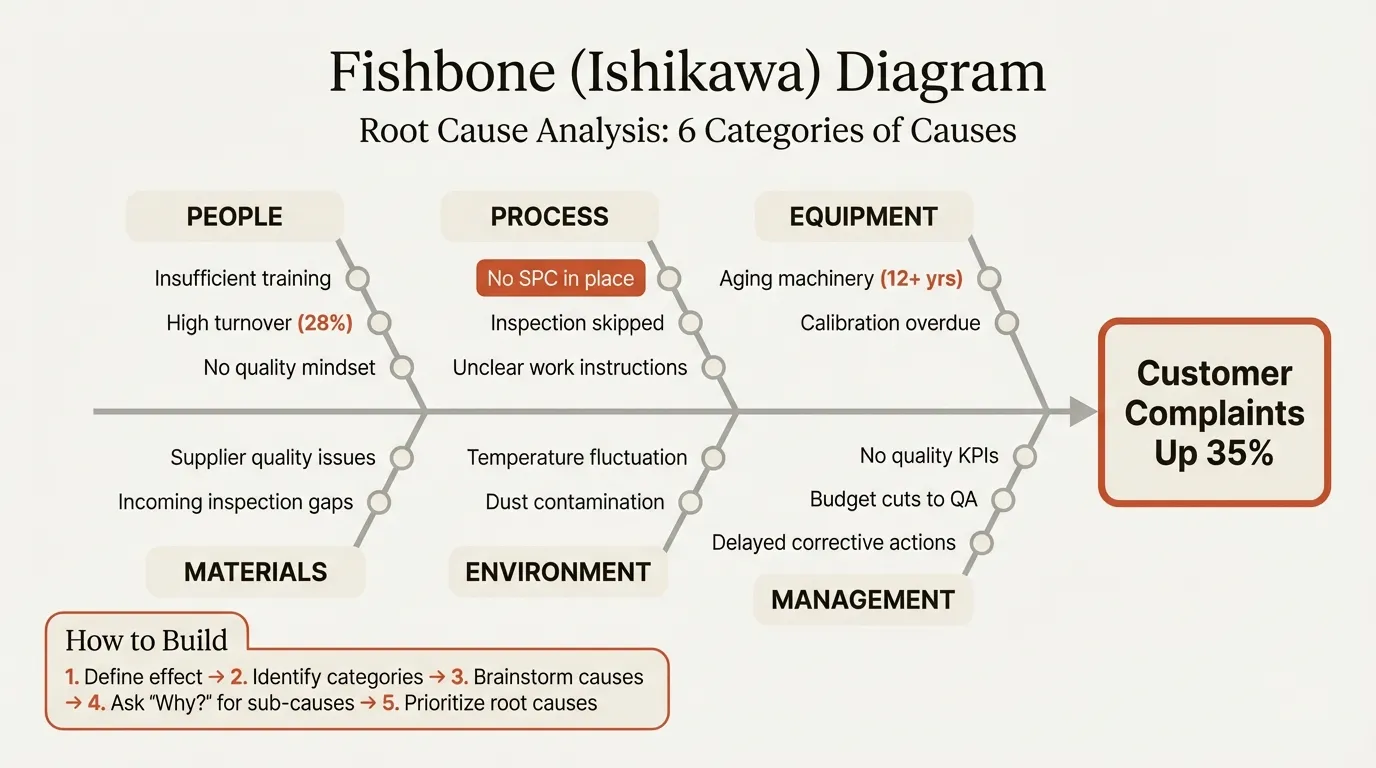

A useful fishbone diagram (also called an Ishikawa or cause-and-effect diagram, after Kaoru Ishikawa, who created it and popularized it in Japanese quality management during the 1960s) maps specific, testable causes across structured categories, then prioritizes which ones to verify with data. The diagram is the hypothesis-generation step, not the conclusion.

After applying fishbone diagrams across 60+ operational improvement, quality, and due diligence engagements, we have found that the difference between productive and wasteful root cause sessions comes down to three things: a tightly scoped problem statement, categories adapted to the context, and disciplined verification after brainstorming. This guide first lays out the step-by-step build process. It then walks through five worked fishbone diagram examples across manufacturing, software, customer churn, project delays, and service quality, and closes with when to use alternative methods and the most common mistakes. For the broader toolkit, see our Strategic Frameworks Guide.

How to Build a Fishbone Diagram: Step-by-Step

Before diving into examples, here is the six-step process we use in practice. Each example below follows this method.

Step 1: Define the problem statement. Write it as a specific, measurable effect. "Defect rate increased from 1.2% to 3.8% in Q3" works. "Quality is bad" does not. The problem goes at the head of the fish.

Step 2: Select cause categories. For manufacturing, use the 6Ms (Man, Machine, Method, Material, Measurement, Mother Nature). For service or software contexts, adapt to People, Process, Technology, Policy, Environment, and Measurement. Choose 4-6 categories relevant to the problem.

Step 3: Brainstorm causes within each category. Work one category at a time. Ask "what within [category] could cause [problem]?" for each branch. Aim for 3-5 specific causes per category.

Step 4: Add sub-causes. For each primary cause, ask "why does this happen?" to add one level of depth. This connects the fishbone to 5 Whys thinking without going five levels deep on every branch.

Step 5: Prioritize. Mark the 3-5 most likely root causes using dot voting or impact-likelihood scoring. Not every bone deserves investigation -- focus on the causes with both high probability and high impact.

Step 6: Verify with data. Collect evidence for each prioritized cause. Run a controlled test, pull historical data, or conduct interviews. Remove causes that the data does not support. The remaining verified causes become your action plan.
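Step 5's impact-likelihood scoring is simple enough to sketch in Python. This version makes three assumptions. Each candidate cause is rated 1-5 for impact and for likelihood; the scale and the `prioritize` name are hypothetical. Any cause rated below 3 on either dimension is dropped, to reflect the "both high probability and high impact" rule. The remaining causes are ranked by the product of the two ratings. The cause names echo Example 1, but the ratings are invented for illustration.

```python
def prioritize(
    ratings: dict[str, tuple[int, int]], top_n: int = 5, min_each: int = 3
) -> list[tuple[str, int]]:
    """Return up to top_n causes ranked by impact x likelihood.

    ratings maps cause -> (impact, likelihood), each on an assumed 1-5 scale.
    Causes rated below min_each on either dimension are dropped first.
    """
    eligible = {
        cause: impact * likelihood
        for cause, (impact, likelihood) in ratings.items()
        if impact >= min_each and likelihood >= min_each
    }
    # sorted() is stable even with reverse=True, so ties keep entry order.
    ranked = sorted(eligible.items(), key=lambda item: item[1], reverse=True)
    return ranked[:top_n]


# Hypothetical ratings for illustration only: (impact, likelihood).
ratings = {
    "Thermocouple calibration overdue": (5, 4),
    "Resin batch variability": (4, 4),
    "New operator cohort": (3, 4),
    "Ambient humidity spike": (3, 2),
    "SPC charts reviewed only at end of shift": (2, 3),
}
for cause, score in prioritize(ratings, top_n=3):
    print(f"{score:>2}  {cause}")
```

The product score favors causes that are high on both dimensions. The `min_each` floor keeps a cause that is very likely but trivial, or catastrophic but implausible, out of the shortlist entirely.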

5 Fishbone Diagram Examples Across Industries

Example 1: Manufacturing Defect (6M Categories)

Problem statement: Injection molding defect rate increased from 1.2% to 3.8% over 8 weeks at Plant B.

| Category | Primary Causes | Sub-Causes |
|---|---|---|
| Man | New operator cohort (4 of 12 hired in last 90 days) | Training program compressed from 3 weeks to 1 week due to staffing urgency |
| Machine | Mold temperature inconsistency on Lines 3 and 7 | Thermocouple calibration overdue by 6 weeks; PM schedule missed during shift change |
| Method | Quality gate at Stage 3 removed during process redesign | Redesign team assumed automated vision system covered the gap; system only catches surface defects |
| Material | Resin batch variability from new supplier (switched in Q2) | Incoming material inspection reduced from 100% to sample-based after supplier certification |
| Measurement | SPC charts not reviewed until end of shift | Real-time alerting disabled after false positive complaints in Q1 |
| Mother Nature | Ambient humidity spike during July-August | Plant B HVAC system operates at 60% capacity in summer; dehumidifiers not installed on production floor |

Verified root causes: Thermocouple calibration drift on Lines 3 and 7 correlated with 68% of defects. Resin supplier batch variability accounted for 22%. The compressed training program was not independently causal -- experienced operators on the same lines had similar defect rates during drift periods.
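The operator-cohort check described above is a stratified comparison. It holds the suspected machine cause fixed by splitting production into drift and non-drift periods, then compares operator cohorts within each period. If the cohorts look alike within a period, the operator cause is not independently driving defects. The sketch below uses a hypothetical record layout and invented counts, not Plant B data.

```python
from collections import defaultdict


def defect_rates(records):
    """Defect rate per (drift_period, cohort) stratum.

    records: iterable of (drift_period: bool, cohort: str, units: int, defects: int).
    """
    units = defaultdict(int)
    defects = defaultdict(int)
    for drift, cohort, n_units, n_defects in records:
        units[(drift, cohort)] += n_units
        defects[(drift, cohort)] += n_defects
    return {key: defects[key] / units[key] for key in units}


# Hypothetical counts for illustration only.
records = [
    (True, "new", 2000, 150),
    (True, "experienced", 4000, 290),
    (False, "new", 3000, 45),
    (False, "experienced", 6000, 78),
]
for (drift, cohort), rate in sorted(defect_rates(records).items()):
    period = "drift" if drift else "no drift"
    print(f"{period:<9} {cohort:<12} {rate:.2%}")
```

In this made-up data, the two cohorts differ by a fraction of a point within each period. Drift periods run several times higher than non-drift periods for both cohorts. That pattern points at the equipment, not the operators.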

Key insight: The team's instinct was to blame new operators. The fishbone redirected investigation toward equipment and materials, where data confirmed the actual causes.

Example 2: Software Bug (Adapted Categories)

Problem statement: Payment processing failure rate increased from 0.3% to 2.1% after the v4.2 release, affecting 1,400 transactions per day.

| Category | Primary Causes | Sub-Causes |
|---|---|---|
| Code | Race condition in concurrent payment session handling | New async handler introduced in v4.2 lacks mutex on shared transaction state |
| Code | Timeout threshold reduced from 30s to 10s in payment gateway call | Change made to improve UX responsiveness; edge cases with slow bank APIs not tested |
| Infrastructure | Database connection pool exhaustion during peak hours (2-4 PM) | Pool size unchanged since v3.0 when traffic was 40% lower |
| Process | Integration test suite does not cover concurrent payment scenarios | Test coverage focused on single-session flows; no load testing in CI/CD pipeline |
| Data | Currency conversion rounding errors on JPY and KRW transactions | Floating-point arithmetic applied to zero-decimal currencies after library update |
| Third Party | Payment gateway provider migrated API version without advance notice | Deprecated response field used for idempotency check returns null in new version |

Prioritized root causes (verified): The race condition accounted for 74% of failures, confirmed by reproducing under load test. The currency rounding error caused 18% of failures, isolated to JPY/KRW transactions. The third-party API migration caused 8% -- the null idempotency field triggered retry loops that compounded the race condition.

Key insight: Software fishbone diagrams work best with adapted categories: Code, Infrastructure, Process, Data, Third Party, and Environment. The standard 6Ms map poorly to software problems. Notice also that two causes interacted (the API migration amplified the race condition), which a single 5 Whys chain would likely have missed.

Example 3: Customer Churn (Service/SaaS Context)

Problem statement: Monthly churn rate for mid-market accounts increased from 2.8% to 4.6% over two quarters, representing $1.2M in lost ARR.

| Category | Primary Causes | Sub-Causes |
|---|---|---|
| People | CSM-to-account ratio degraded from 1:25 to 1:45 after hiring freeze | QBRs dropped from quarterly to semi-annual; at-risk signals missed for 30+ accounts |
| Product | Core workflow feature (bulk editing) broken for 6 weeks after v8 release | Engineering prioritized new feature development over bug backlog |
| Process | No structured onboarding for accounts migrated to new pricing tier | Migrated accounts received no training on new features included in tier; perceived as price increase with no added value |
| Pricing | 22% price increase applied uniformly without segmentation | High-usage accounts saw reasonable per-unit cost; low-usage accounts saw 40%+ effective increase |
| Competition | Two competitors launched free tiers targeting mid-market in Q1 | Sales team not equipped with competitive battle cards; no retention offers authorized |
| Measurement | Health score model not updated after product changes in v8 | Usage metrics weighted toward features deprecated in v8; healthy accounts flagged at-risk and vice versa |

Prioritized root causes (verified): Exit surveys and win/loss interviews attributed 41% of churned revenue to the pricing increase without segmentation, 28% to the broken bulk editing feature, and 19% to competitive displacement. The CSM ratio degradation was an amplifier -- accounts with regular QBR contact churned at 2.1% even with the price increase, versus 5.9% without.

Key insight: Churn fishbone diagrams need cross-functional categories because churn rarely has a single cause. The pricing and product causes interacted. Fixing either one alone would have reduced churn by approximately 30%, but addressing both together recovered an estimated 65%, more than the two individual effects combined. This is the kind of multi-cause interaction that decision trees alone cannot capture.
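One way to check whether the two fixes reinforce each other is to compare them against two baselines. The first is simple addition of the individual reductions. The second is the reduction expected if each fix acted independently on whatever churn the other left behind. The sketch below plugs in the approximate figures from this example. The function name and the independence baseline are illustrative assumptions, not the method used in the original analysis.

```python
def interaction_check(alone_a: float, alone_b: float, together: float) -> dict[str, float]:
    """Compare a joint fix with additive and independent-action baselines.

    Inputs are fractional reductions in churn, e.g. 0.30 for 30%.
    """
    additive = alone_a + alone_b
    # Independent action: each fix removes its share of the churn the other fix leaves.
    independent = 1 - (1 - alone_a) * (1 - alone_b)
    return {
        "additive": additive,
        "independent": independent,
        "observed": together,
        "excess_over_additive": together - additive,
    }


result = interaction_check(alone_a=0.30, alone_b=0.30, together=0.65)
for name, value in result.items():
    print(f"{name:<22} {value:.0%}")
```

With these inputs, the observed 65% sits above both the 60% additive baseline and the 51% independent baseline, which is what an interaction between the two causes looks like in numbers.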

Example 4: Project Delay

Problem statement: ERP implementation delivered 14 weeks behind schedule, exceeding budget by $2.4M.

| Category | Primary Causes | Sub-Causes |
|---|---|---|
| People | Key solution architect left at week 8; replacement took 5 weeks to onboard | No knowledge transfer documentation; architecture decisions stored in personal notes |
| Process | Scope changes approved without timeline adjustment (7 CRs in 12 weeks) | Change control board met monthly; approvals treated as additive without trade-off analysis |
| Technology | Legacy data migration took 3x estimated time | Source system had 14 years of undocumented schema changes; data mapping assumed clean schemas |
| Stakeholders | Business unit leads unavailable for UAT during Q4 close | UAT scheduled during busiest financial period; no backup testers identified |
| Vendor | Integration partner delivered custom modules 4 weeks late | Partner staffed project with junior developers after proposal stage; no SLA enforcement |
| Requirements | 23% of requirements changed or added after design freeze | Design freeze treated as guideline rather than gate; no formal sign-off process |

Prioritized root causes (verified): Scope creep without timeline adjustment (7 CRs with no schedule impact assessment) accounted for 6 of the 14 weeks of delay. Legacy data migration complexity added 5 weeks. The architect departure added 3 weeks of rework. Vendor delays overlapped with migration work and did not independently extend the timeline.

Key insight: Project delay fishbone diagrams almost always reveal that no single cause explains the full delay. The causes overlap temporally. The fishbone's value here was separating independent delays (scope creep) from concurrent ones (vendor delays that overlapped with migration) to accurately attribute weeks and avoid double-counting.
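The double-counting problem becomes concrete when each delay is placed on a shared timeline and only the weeks in which at least one delay was active are counted. This is a simplification, since it treats every delay window as blocking the critical path. The week numbers below are hypothetical windows chosen to match the attribution in this example: 6 weeks of scope creep, 5 of migration, 3 of architect rework, and a 4-week vendor slip that overlaps the migration.

```python
def schedule_slip(delays):
    """Weeks of slip from delay windows, counting overlapping weeks only once.

    delays: list of (cause, start_week, end_week), end_week exclusive.
    """
    merged = []  # [start, end] windows after merging overlaps
    for _, start, end in sorted(delays, key=lambda d: d[1]):
        if merged and start <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], end)  # extend the current window
        else:
            merged.append([start, end])
    return sum(end - start for start, end in merged)


# Hypothetical delay windows, in weeks of slip.
delays = [
    ("Scope changes without timeline adjustment", 0, 6),
    ("Legacy data migration", 6, 11),
    ("Vendor module delivery", 7, 11),
    ("Architect departure rework", 11, 14),
]
naive_total = sum(end - start for _, start, end in delays)
print(f"Sum of individual delays: {naive_total} weeks")
print(f"Slip with overlaps counted once: {schedule_slip(delays)} weeks")
```

Summing the four causes naively gives 18 weeks. Merging the overlapping windows gives the 14 weeks the project actually slipped, because the vendor delay sits inside the migration window.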

Example 5: Service Quality Issue (Healthcare)

Problem statement: Patient satisfaction scores dropped from 4.2 to 3.4 (out of 5) in the outpatient clinic over one quarter.

| Category | Primary Causes | Sub-Causes |
|---|---|---|
| People | 3 of 8 experienced nurses transferred to inpatient unit | Replacements are recent graduates with limited outpatient triage experience |
| Process | Average wait time increased from 18 to 42 minutes | Scheduling system overbooking rate increased from 5% to 15% after policy change to reduce no-shows |
| Technology | New EHR system requires 3x more clicks per patient encounter | Physicians spending 40% of visit time on screen instead of patient interaction |
| Environment | Waiting area reduced by 30% during renovation | Patients standing or sitting in hallways; perceived crowding amplifies wait time frustration |
| Policy | Insurance verification moved from pre-visit to check-in | Adds 8-12 minutes to check-in; creates bottleneck at reception |
| Measurement | Satisfaction surveys moved from in-clinic to post-visit email | Response rate dropped from 65% to 22%; respondents skew toward dissatisfied patients |

Prioritized root causes (verified): Wait time increase correlated most strongly with satisfaction decline (r = -0.82). The overbooking policy and insurance verification change together explained the wait time spike. The EHR system usability issue independently reduced satisfaction -- patients with experienced physicians (who adapted faster to the new system) reported 0.4 points higher satisfaction despite identical wait times.

Key insight: The measurement category revealed a confound: the survey method change inflated the apparent decline. Actual satisfaction dropped from approximately 4.2 to 3.7, not 3.4. Without the fishbone forcing examination of the Measurement category, the team would have overestimated the problem severity and potentially over-invested in remediation.

Comparing Fishbone Diagrams with Other Root Cause Analysis Methods

Fishbone diagrams are one of several root cause analysis tools. Choosing the right one depends on the problem structure.

| Method | Best For | Strengths | Limitations |
|---|---|---|---|
| Fishbone (Ishikawa) | Multi-cause problems across categories | Forces exhaustive, structured brainstorming; visual and collaborative | Does not quantify cause likelihood; requires separate verification step |
| 5 Whys | Single causal chains; isolated incidents | Simple, fast, no training required | Stops at one chain; misses parallel causes; depends on who is asking |
| Fault Tree Analysis | Safety-critical and engineering failures | Quantitative; models AND/OR cause combinations | Requires probability data; time-intensive for complex systems |
| Pareto Analysis | Prioritizing among known causes | Data-driven; identifies the vital few | Requires existing defect data; does not discover new causes |

When to combine methods: As ASQ's root cause analysis body of knowledge recommends, start with a fishbone to generate the full cause landscape. Use 5 Whys to drill into each prioritized branch. Apply Pareto analysis to rank verified causes by frequency or impact. This three-step sequence -- brainstorm, drill, prioritize -- is the most effective root cause analysis workflow we have seen across engagements.
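The final prioritize step can be sketched as a Pareto table. Sort verified causes by impact, track the cumulative share, and keep the "vital few" up to a cutoff. The input reuses the verified failure shares from Example 2. The 80% cutoff is a common convention, not a rule from the workflow above, and the function names are hypothetical.

```python
def pareto(impacts: dict[str, float]) -> list[tuple[str, float, float]]:
    """Sort causes by impact, largest first, with each row's cumulative share of the total."""
    total = sum(impacts.values())
    running = 0.0
    rows = []
    for cause, impact in sorted(impacts.items(), key=lambda kv: kv[1], reverse=True):
        running += impact
        rows.append((cause, impact, running / total))
    return rows


def vital_few(rows: list[tuple[str, float, float]], cutoff: float = 0.8) -> list[str]:
    """Causes up to and including the first row whose cumulative share reaches cutoff."""
    selected = []
    for cause, _, cumulative in rows:
        selected.append(cause)
        if cumulative >= cutoff:
            break
    return selected


# Verified share of payment failures per cause, from Example 2 (percent).
failures = {
    "Gateway API migration": 8,
    "Race condition in async handler": 74,
    "JPY/KRW rounding error": 18,
}
rows = pareto(failures)
for cause, impact, cumulative in rows:
    print(f"{cause:<32} {impact:>3}%  cumulative {cumulative:.0%}")
print(vital_few(rows))
```

A Pareto table ranks causes one at a time. It will not show that the API migration amplified the race condition in Example 2, so that interaction still has to be read from the fishbone itself.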

For a ready-to-use starting point, see the Root Cause Analysis Template.

Common Fishbone Diagram Mistakes

Too many branches. Fishbone diagrams with 40+ causes across 8 categories become unreadable and unprioritizable. If you have more than 30 causes, the problem statement is too broad. Split it into sub-problems and run separate fishbone sessions for each.

Vague causes. "Communication issues" and "lack of training" are symptoms, not root causes. Push for specificity: "New hires receive no documentation on the legacy data schema" is actionable. "Training is insufficient" is not. Every cause should answer: who, what, when, and how much.

Skipping verification. The fishbone produces hypotheses, not conclusions. We have seen teams implement solutions for all 15 brainstormed causes without testing any of them. In the examples above, verification confirmed only two to four of the six primary causes in each diagram. Budget your time as 30% brainstorming, 70% verification.

Using the wrong categories. The 6Ms work for manufacturing. They do not work for software, service, or strategy problems. Adapt categories to your context. A fishbone diagram for customer churn needs People, Product, Process, Pricing, Competition, and Measurement -- not Man, Machine, Material.

Stopping at the first level. A fishbone with only primary causes (one level of branches) rarely reaches root causes. Add at least one sub-cause level by asking "why does this cause exist?" for each primary branch. The sub-cause is usually where the actionable fix lives.

Key Takeaways

Fishbone diagrams are hypothesis generation tools, not answer generators. Their value is in forcing structured, exhaustive thinking across categories before the team converges on a solution -- which prevents the common failure of fixing the most obvious cause while the actual root cause persists.

- Scope the problem tightly -- a measurable problem statement prevents the diagram from becoming an everything-is-wrong brainstorm

- Adapt categories to context -- use 6Ms for manufacturing, adapted categories (People, Process, Technology, Data, Policy) for service and software

- Prioritize before acting -- mark the 3-5 most likely causes and verify with data before implementing fixes

- Combine with other methods -- use fishbone for breadth, 5 Whys for depth, and Pareto for prioritization

- Keep it readable -- 4-6 categories, 3-5 causes per category, one level of sub-causes
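Once a diagram is captured as data, the readability limits above are easy to check mechanically. A minimal Python sketch follows. The `Fishbone` and `Cause` names are hypothetical, and the checks encode only this article's guidance: 4-6 categories, 3-5 causes per category, and one level of sub-causes.

```python
from dataclasses import dataclass, field


@dataclass
class Cause:
    """A primary cause on one branch, with one level of sub-causes."""
    text: str
    sub_causes: list[str] = field(default_factory=list)


@dataclass
class Fishbone:
    """Problem statement at the head; one branch (list of causes) per category."""
    problem: str
    branches: dict[str, list[Cause]] = field(default_factory=dict)

    def add(self, category: str, cause: str, *sub_causes: str) -> None:
        """Attach a primary cause and its sub-causes to a category branch."""
        self.branches.setdefault(category, []).append(Cause(cause, list(sub_causes)))

    def readability_warnings(self) -> list[str]:
        """List departures from the 4-6 category / 3-5 cause / one sub-cause level guidance."""
        warnings = []
        if not 4 <= len(self.branches) <= 6:
            warnings.append(f"{len(self.branches)} categories (aim for 4-6)")
        for category, causes in self.branches.items():
            if not 3 <= len(causes) <= 5:
                warnings.append(f"{category}: {len(causes)} causes (aim for 3-5)")
            for cause in causes:
                if not cause.sub_causes:
                    warnings.append(f"{category}: '{cause.text}' has no sub-cause")
        return warnings


# Usage: a diagram that has only just been started.
fb = Fishbone("Defect rate increased from 1.2% to 3.8% in Q3")
fb.add("Machine", "Mold temperature inconsistency on Lines 3 and 7",
       "Thermocouple calibration overdue by 6 weeks")
for warning in fb.readability_warnings():
    print(warning)
```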

For related frameworks, see our SWOT Analysis Examples and Decision Tree Examples. For the full consulting frameworks toolkit, explore the Strategic Frameworks Guide.
