Change Management Models: 6 Proven Approaches Compared

Compare 6 change management models used in consulting and corporate transformations. Includes selection criteria, comparison table, and common mistakes.

Change management models are only as useful as the specificity with which they are applied. A textbook summary of Kotter's 8 steps tells you what to do in theory. A case study of those 8 steps in a $400M post-merger integration tells you what each step actually looks like when 2,300 employees are watching, customers are defecting, and the board is demanding synergy numbers by Q3.

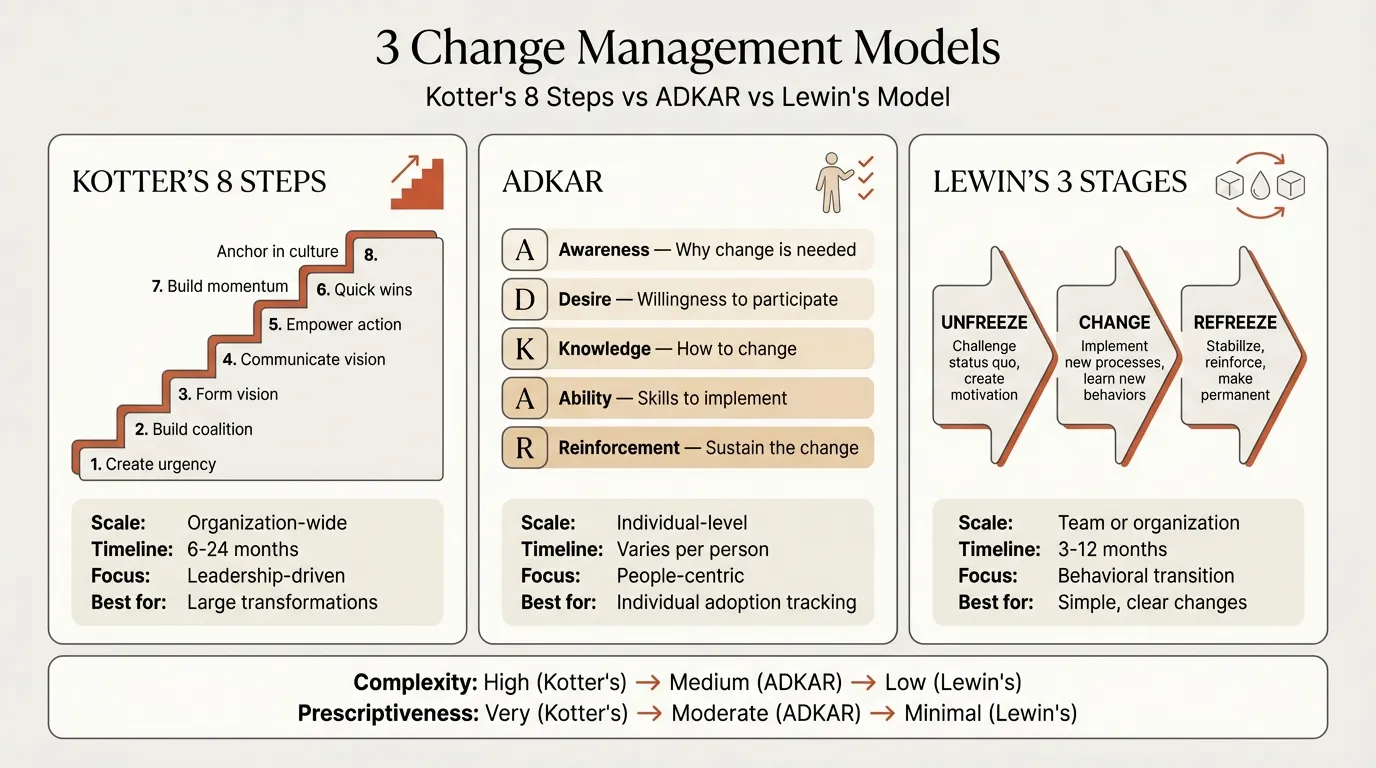

After leading or advising on 60+ transformation programs, we have found that three models -- Kotter, ADKAR, and Lewin -- account for roughly 80% of the change management approaches used in practice. The remaining models (McKinsey 7-S, Bridges, Nudge Theory) serve important supporting roles but are rarely used as primary frameworks. For guidance on choosing between these models and combining them effectively, see our Change Management Frameworks guide.

This post goes deep on the three dominant models with worked case studies, then provides a comparison reference for all six. For the broader strategic toolkit these fit into, see our Strategic Frameworks Guide.

Kotter's 8-Step Process: Post-Merger Integration Case Study

John Kotter's model, first outlined in his 1995 Harvard Business Review article "Leading Change: Why Transformation Efforts Fail," is the most widely referenced change management framework in consulting. The 8 steps -- create urgency, form a guiding coalition, develop vision and strategy, communicate the vision, empower broad-based action, generate short-term wins, consolidate gains, and anchor changes in culture -- are straightforward on paper. In practice, each step conceals execution challenges that surface only at scale.

The scenario

A $400M acquisition of a regional competitor in professional services. Combined headcount: 2,300. The strategic rationale was clear -- 23% of customers were in overlapping service areas, and a major competitor was already targeting those accounts with bundled pricing. Integration timeline: 18 months to full operational integration.

How the 8 steps played out

Step 1 -- Create urgency. The integration team presented data showing that the competitor had won 11 shared accounts worth $8.2M in annual revenue over the previous two quarters. The message was concrete: "We are losing customers today, not theoretically in the future." This worked better than generic urgency statements because it named the competitor and quantified the loss.

Step 2 -- Form a guiding coalition. The initial coalition included 6 senior leaders -- all from the acquiring company. By week 4, resistance from the acquired company's middle management had stalled three workstreams. The coalition was expanded to include 4 directors from the acquired firm, chosen not by seniority but by informal influence. One was a 15-year veteran whom the acquired company's staff trusted more than their own executives.

Step 3 -- Develop vision and strategy. The vision statement went through 7 drafts. The final version was one sentence: "One team serving shared clients with integrated capabilities that neither company could offer alone." The strategy document mapped 14 integration workstreams, each with a RACI matrix that crossed company boundaries.

Step 4 -- Communicate the vision. Weekly town halls for the first 8 weeks, then biweekly. Every town hall opened with a customer win that demonstrated the integrated model working. By week 6, the team had identified 3 early joint client wins worth $1.4M that validated the merger thesis.

Step 5 -- Empower broad-based action. This is where the integration nearly failed. The acquired company's CRM system could not share data with the acquirer's platform, and IT had no budget for integration until Q3. For 11 weeks, account managers maintained duplicate records in both systems. Morale cratered. The fix was an emergency $180K allocation for a middleware connector that went live in week 14. The lesson: structural barriers at step 5 will undo all the coalition-building and vision work from steps 1-4 if not resolved with urgency. For a structured approach to identifying these barriers across stakeholder groups, see our Stakeholder Management Guide.

Step 6 -- Generate short-term wins. The team targeted three quick wins: a unified client service agreement template (delivered in month 2), a combined proposal team that won a $3.2M competitive bid in month 4, and a shared training program that launched in month 5. Each win was publicized at the next town hall.

Steps 7-8 -- Consolidate gains and anchor in culture. By month 12, customer retention in the overlap zone had improved from 71% to 89%. The integration team was not disbanded until month 18, which prevented the premature victory declaration that Kotter lists among the most common errors in transformation efforts. Performance reviews were updated to include cross-company collaboration metrics, and promotion criteria explicitly rewarded integration behaviors.

Outcome: 18-month integration completed on schedule. Revenue synergies hit $14.2M against a $12M target. Voluntary attrition in integration-affected roles stayed at 9%, below the 15% threshold that signals a failing integration.

ADKAR Model: ERP Implementation Case Study

Prosci's ADKAR model tracks individual adoption through five sequential stages: Awareness, Desire, Knowledge, Ability, and Reinforcement. Its power is diagnostic -- when adoption stalls, ADKAR identifies exactly where users are stuck rather than treating resistance as a monolithic problem.

The scenario

An ERP implementation (SAP S/4HANA migration) across 1,200 users in a mid-market manufacturing company. Timeline: 9 months from kickoff to go-live. The previous ERP had been in place for 12 years. Many users had never experienced a major system change.

The ADKAR tracking dashboard

The change team surveyed a representative sample of 200 users at weeks 4, 8, and 12 post-kickoff, scoring each ADKAR element on a 1-5 scale. Users scoring 4 or above on an element were considered to have "reached" that stage.

| ADKAR Element | Week 4 | Week 8 | Week 12 (post-intervention) |
|---|---|---|---|
| Awareness | 78% | 91% | 94% |
| Desire | 52% | 61% | 73% |
| Knowledge | 19% | 34% | 68% |
| Ability | 8% | 22% | 51% |
| Reinforcement | N/A | N/A | 31% |
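Each percentage in the dashboard follows directly from the scoring rule: for each element, count the respondents who scored 4 or higher and divide by the number who answered. Below is a minimal Python sketch of that calculation, assuming each survey response is stored as a dict mapping element name to a 1-5 score, with `None` for elements not yet measured (such as Reinforcement before go-live). The data layout and the `adkar_reach` name are hypothetical, not part of Prosci's tooling.

```python
from typing import Dict, List, Optional

ADKAR_ELEMENTS = ["Awareness", "Desire", "Knowledge", "Ability", "Reinforcement"]
REACHED_THRESHOLD = 4  # a score of 4 or above on the 1-5 scale counts as "reached"


def adkar_reach(responses: List[Dict[str, Optional[int]]]) -> Dict[str, Optional[float]]:
    """Return the percentage of respondents who reached each ADKAR element.

    Each response maps an element name to a 1-5 score, or to None when the
    element was not measured for that respondent.
    """
    reach: Dict[str, Optional[float]] = {}
    for element in ADKAR_ELEMENTS:
        # Only respondents with a score for this element count toward its denominator.
        scores = [r[element] for r in responses if r.get(element) is not None]
        if not scores:
            reach[element] = None  # shown as N/A in the dashboard
            continue
        reached = sum(1 for s in scores if s >= REACHED_THRESHOLD)
        reach[element] = round(100 * reached / len(scores), 1)
    return reach
```

Running this once per survey wave (weeks 4, 8, and 12) produces one column of the table. Because ADKAR stages are sequential, the output shows where drop-off begins; deciding which gap to address first still requires the kind of root cause analysis described in the diagnoses that follow.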

What the data revealed

Week 4 diagnosis: Awareness was healthy at 78%, but Desire lagged at 52%. Nearly half the user base understood the change was happening but did not want it. Root cause analysis revealed that the communication campaign had explained what was changing but not "what's in it for me." Warehouse floor managers, in particular, saw only disruption and no personal benefit.

The Desire intervention (weeks 5-7): Rather than more top-down messaging, the change team identified 8 "system champions" -- floor managers and power users who had participated in pilot testing and genuinely liked the new system. These champions ran 45-minute sessions with their peer groups, demonstrating specific workflow improvements: purchase order approvals dropping from 6 clicks to 2, real-time inventory visibility replacing a manual count that consumed 3 hours per week. Desire scores jumped from 52% to 61% by week 8.

Week 8 diagnosis: Knowledge was the critical bottleneck. Only 34% of users had reached the Knowledge stage, and training was running 3 weeks behind schedule because the initial plan had assumed 2-hour classroom sessions would be enough. They were not: users needed hands-on practice in a sandbox environment, not lectures.

The Knowledge intervention (weeks 9-11): Training was restructured from classroom lectures to role-based sandbox labs. Each user received 4 hours of hands-on practice with their specific workflows, not generic system overviews. Training completion with demonstrated competency (not just attendance) became a requirement before go-live access was granted. Knowledge scores doubled from 34% to 68% by week 12.

Week 12 and beyond: Ability -- actually performing the work in the live system -- reached 51% by week 12, which was below target. The go-live was delayed by 3 weeks to allow additional practice time. Post-go-live, a "floor support" model placed trained super-users on each shift for the first 30 days, resolving questions in real time rather than through a help desk queue.

Outcome: 90-day post-go-live system utilization reached 87%. Help desk tickets peaked at 340 in week 1 and dropped to 45 by week 6. The previous ERP migration at a sister facility (done without ADKAR tracking) had taken 5 months to reach 70% utilization.


Lewin's 3-Stage Model: Cultural Transformation Case Study

Kurt Lewin's Unfreeze-Change-Refreeze model from the 1940s is the simplest of the six frameworks compared here. Its three stages -- destabilize the current state, introduce new behaviors, and stabilize the new state -- capture a fundamental truth: people resist change because the status quo feels safe, so you must make the status quo feel unacceptable before new behaviors will take hold.

The scenario

A 500-person technology company shifting from a top-down, approval-heavy engineering culture to a distributed decision-making model. No technology change. No structural reorganization. Pure behavioral and cultural shift. The catalyst: an employee engagement survey showed 72% dissatisfaction with decision-making speed, and the company had lost 3 major product bids in the previous year because competitors shipped faster.

Unfreeze (months 1-4)

The unfreezing phase required making the cost of the current culture visible and visceral.

Data campaign: Leadership published the engagement survey results unedited -- including verbatim comments like "I have to get 4 approvals to change a button color" and "By the time we decide, the market has moved." The 72% dissatisfaction number became a rallying point.

Town halls with customer evidence: Three all-hands sessions featured recorded interviews with customers who had chosen competitors. One customer stated directly: "We liked your product better, but your competitor delivered in 6 weeks. You quoted 14." That recording was replayed more than any internal presentation.

Anonymous decision audit: The change team tracked 50 representative decisions over 4 weeks and published the results. Average time from proposal to approval: 11 business days. Average number of approval steps: 3.4. Number of decisions where the final approver changed anything substantive: 7 out of 50 (14%). The data made the case that most approvals added delay without adding value.
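The three audit statistics are simple aggregates over the logged decisions. Below is a minimal Python sketch of the calculation; the `Decision` record, its field names, and `audit_summary` are hypothetical, since the case does not describe how the audit data was stored.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Decision:
    business_days_to_approval: int
    approval_steps: int
    substantive_change: bool  # did the final approver change anything material?


def audit_summary(decisions: List[Decision]) -> Dict[str, float]:
    """Average cycle time, average approval steps, and share of substantive changes."""
    if not decisions:
        raise ValueError("no decisions to summarize")
    n = len(decisions)
    return {
        "avg_days": sum(d.business_days_to_approval for d in decisions) / n,
        "avg_steps": sum(d.approval_steps for d in decisions) / n,
        # Summing booleans counts the True values.
        "pct_substantive": 100 * sum(d.substantive_change for d in decisions) / n,
    }
```

For example, 50 records in which 7 have `substantive_change=True` yield `pct_substantive == 14.0`, matching the 7-of-50 figure above. The last metric is what made the audit persuasive: it measures whether approvals changed outcomes, not just how long they took.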

Change (months 5-12)

Decision rights framework: Teams were given explicit decision authority for categories that previously required escalation. Engineering teams could approve feature changes under 40 hours of estimated effort. Design teams could approve UX changes without VP sign-off. Spending decisions under $5,000 no longer required finance review.
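Writing decision rights down as explicit rules makes the boundaries testable and removes ambiguity about when escalation is still required. Below is a minimal Python sketch encoding the three thresholds listed above. The `Category` enum, the `team_can_decide` function, and the assumption that any decision outside these categories still escalates are illustrative and are not details reported in the case.

```python
from enum import Enum
from typing import Optional


class Category(Enum):
    FEATURE_CHANGE = "feature_change"
    UX_CHANGE = "ux_change"
    SPEND = "spend"
    OTHER = "other"


FEATURE_EFFORT_LIMIT_HOURS = 40  # engineering approves features under 40 hours of effort
SPEND_LIMIT_USD = 5_000          # spending under $5,000 skips finance review


def team_can_decide(category: Category,
                    effort_hours: Optional[float] = None,
                    amount_usd: Optional[float] = None) -> bool:
    """Return True if the team may decide without escalating."""
    if category is Category.FEATURE_CHANGE:
        # A missing estimate is treated conservatively and escalates.
        return effort_hours is not None and effort_hours < FEATURE_EFFORT_LIMIT_HOURS
    if category is Category.UX_CHANGE:
        return True  # design teams approve UX changes without VP sign-off
    if category is Category.SPEND:
        return amount_usd is not None and amount_usd < SPEND_LIMIT_USD
    return False  # assumption: anything outside the framework still escalates
```

Note the strict comparisons: "under 40 hours" and "under $5,000" mean that a 40-hour feature or a $5,000 purchase still escalates. A written framework should settle boundary cases like these in advance.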

Manager retraining: This was the hardest part. Thirty-eight managers had built their identities around being approval gatekeepers. Six coaching sessions over 3 months reframed the manager role from "approver" to "context provider": managers set the strategic boundaries, and teams made decisions within them.

Visible metrics: A "decision velocity" dashboard tracked average time-to-decision weekly. It was displayed on monitors in common areas. In month 5, the average was 9.8 days. By month 9, it had dropped to 4.1 days.

Refreeze (months 13-18)

Structural reinforcement: Performance reviews were updated to include "decision-making effectiveness" as a rated dimension. Promotion criteria for managers explicitly included "empowers team decisions" as a requirement. Two managers who consistently re-centralized decisions were given coaching; one adapted, one was moved to an individual contributor role.

Measurement: Employee engagement scores on decision-making satisfaction rose from 3.2 to 4.1 (out of 5.0) over 18 months. Product delivery cycle time decreased from an average of 14.2 weeks to 8.7 weeks. The company won 2 of the next 4 competitive bids where speed was a deciding factor.
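Expressing the before-and-after figures as relative changes makes them easier to compare across metrics. Below is a short Python helper (the `pct_change` name is ours) applied to the numbers reported in this case.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return 100 * (after - before) / before


# Figures reported in the Lewin case study.
metrics = {
    "Average time-to-decision (days), month 5 to month 9": (9.8, 4.1),
    "Product delivery cycle time (weeks)": (14.2, 8.7),
    "Decision-making satisfaction (1-5 scale)": (3.2, 4.1),
}

for label, (before, after) in metrics.items():
    print(f"{label}: {pct_change(before, after):+.1f}%")
```

This prints -58.2%, -38.7%, and +28.1%. Be careful when reading them side by side: the decision-velocity figure covers only months 5 to 9, so the three percentages describe different time windows.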

Outcome: The cultural shift held because the refreeze phase was treated as seriously as the unfreeze phase. Metrics, incentives, and personnel decisions all reinforced the new behavior pattern.

The Other Three Models: Quick Reference

The following models serve important roles but are more commonly used as supporting frameworks alongside Kotter, ADKAR, or Lewin.

McKinsey 7-S Framework maps seven interdependent organizational elements -- strategy, structure, systems, shared values, skills, style, and staff -- to surface misalignment among them. It is a diagnostic tool, not a step-by-step change process, so most practitioners pair 7-S with Kotter or ADKAR for the execution layer. Best for structural reorganizations and post-merger integrations. For a detailed breakdown, see McKinsey 7S Framework.

Bridges' Transition Model focuses on the psychological experience of change rather than the change itself. Its three phases -- Ending (acknowledging loss), Neutral Zone (navigating ambiguity), and New Beginning (internalizing the new identity) -- fill the emotional gap that process-focused models tend to underweight. Best used alongside a structured model like Kotter when the change involves layoffs, role eliminations, or cultural overhauls.

Nudge Theory applies behavioral economics to change management by reshaping the environment so that desired behaviors become the path of least resistance. Defaults, social proof, and friction reduction drive adoption without mandates. Best for low-stakes behavioral changes like tool adoption, compliance behaviors, and incremental process adjustments. On its own, it is insufficient for high-stakes transformations that require emotional commitment.

Change Management Models Comparison Table

| Model | Best For | Complexity | Time Horizon | Focus Area | Approach |
|---|---|---|---|---|---|
| Kotter's 8-Step | Cultural change, mergers, strategic pivots | High | 12-36 months | Leadership and organizational momentum | Top-down |
| ADKAR | Technology rollouts, process changes | Medium | 3-18 months | Individual adoption tracking | Bottom-up |
| Lewin's 3-Stage | Well-defined, bounded changes | Low | 3-12 months | Group psychology and stability | Top-down |
| McKinsey 7-S | Structural reorgs, operating model changes | High | 6-24 months | Organizational alignment across 7 dimensions | Diagnostic |
| Bridges' Transition | Emotionally charged transformations | Medium | 6-18 months | Psychological transition and identity | People-centered |
| Nudge Theory | Behavioral adoption, compliance | Low | 1-6 months | Environment design and defaults | Environmental |
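If you keep the comparison table as structured data, you can filter a shortlist mechanically before making the judgment calls. Below is a minimal Python sketch with rows transcribed from the table above. The `ModelProfile` type and `shortlist` function are hypothetical, and filtering on time horizon and complexity alone is a simplification, not a selection method.

```python
from dataclasses import dataclass
from typing import List, Optional

COMPLEXITY_RANK = {"Low": 1, "Medium": 2, "High": 3}


@dataclass(frozen=True)
class ModelProfile:
    name: str
    complexity: str
    min_months: int
    max_months: int


# Rows transcribed from the comparison table (complexity and time horizon only).
MODELS = [
    ModelProfile("Kotter's 8-Step", "High", 12, 36),
    ModelProfile("ADKAR", "Medium", 3, 18),
    ModelProfile("Lewin's 3-Stage", "Low", 3, 12),
    ModelProfile("McKinsey 7-S", "High", 6, 24),
    ModelProfile("Bridges' Transition", "Medium", 6, 18),
    ModelProfile("Nudge Theory", "Low", 1, 6),
]


def shortlist(duration_months: int, max_complexity: Optional[str] = None) -> List[str]:
    """Names of models whose typical time horizon covers the program duration."""
    results = []
    for m in MODELS:
        if not (m.min_months <= duration_months <= m.max_months):
            continue
        if (max_complexity is not None
                and COMPLEXITY_RANK[m.complexity] > COMPLEXITY_RANK[max_complexity]):
            continue
        results.append(m.name)
    return results
```

For example, `shortlist(18, max_complexity="Medium")` returns `["ADKAR", "Bridges' Transition"]`. Fit with the change type in the "Best For" column still has to be judged case by case.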

Applying Change Management Models to Presentations

The most effective change management presentations share three characteristics:

- A single slide that maps the model to the specific transformation -- not a textbook diagram, but the framework populated with the organization's actual phases, milestones, and metrics (like the ADKAR tracking dashboard above)

- A stakeholder-specific communication plan shown in a RACI matrix or Gantt-style timeline

- Progress tracking dashboards that show adoption metrics at regular intervals

For building these slides efficiently, tools like Deckary provide consulting-grade Gantt charts and structured layouts that make populating framework slides significantly faster than native PowerPoint workarounds.

Key Takeaways

- Kotter works for large-scale, leadership-driven transformations but demands sustained coalition engagement and rapid removal of structural barriers (the CRM integration failure in the post-merger case nearly derailed the entire effort).

- ADKAR's diagnostic power is its greatest strength. The ERP case study shows that aggregate adoption numbers hide specific bottlenecks: the binding constraint shifted from Desire at week 4 to Knowledge at week 8, and each required a different intervention.

- Lewin's simplicity is deceptive. The cultural transformation case required 4 months of deliberate unfreezing before any behavioral change was introduced, and 6 months of active refreezing to prevent reversion.

- Most complex transformations combine models. Use Kotter for the leadership-level agenda and ADKAR for individual adoption tracking. See our Change Management Frameworks guide for detailed combination strategies.

- McKinsey 7-S, Bridges, and Nudge Theory are valuable supporting models -- use each for the specific layer of change it was designed to address.

For the broader strategic toolkit that these change management models fit into, see our Strategic Frameworks Guide.
